Operations Manual

Copyright © 2010, 2011 Oracle and/or its affiliates. (The original version of this Operations Manual without the Intel modifications.)

Copyright © 2011, 2017 Intel Corporation. (Intel modifications to the original version of this Operations Manual.)

Important Notice from Intel

INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL'S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT.

A "Mission Critical Application" is any application in which failure of the Intel Product could result, directly or indirectly, in personal injury or death. SHOULD YOU PURCHASE OR USE INTEL'S PRODUCTS FOR ANY SUCH MISSION CRITICAL APPLICATION, YOU SHALL INDEMNIFY AND HOLD INTEL AND ITS SUBSIDIARIES, SUBCONTRACTORS AND AFFILIATES, AND THE DIRECTORS, OFFICERS, AND EMPLOYEES OF EACH, HARMLESS AGAINST ALL CLAIMS COSTS, DAMAGES, AND EXPENSES AND REASONABLE ATTORNEYS' FEES ARISING OUT OF, DIRECTLY OR INDIRECTLY, ANY CLAIM OF PRODUCT LIABILITY, PERSONAL INJURY, OR DEATH ARISING IN ANY WAY OUT OF SUCH MISSION CRITICAL APPLICATION, WHETHER OR NOT INTEL OR ITS SUBCONTRACTOR WAS NEGLIGENT IN THE DESIGN, MANUFACTURE, OR WARNING OF THE INTEL PRODUCT OR ANY OF ITS PARTS.

Intel may make changes to specifications and product descriptions at any time, without notice. Designers must not rely on the absence or characteristics of any features or instructions marked "reserved" or "undefined". Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them. The information here is subject to change without notice. Do not finalize a design with this information.

The products described in this document may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request.

Contact your local Intel sales office or your distributor to obtain the latest specifications and before placing your product order.

Copies of documents which have an order number and are referenced in this document, or other Intel literature, may be obtained by calling 1-800-548-4725, or go to: https://www.intel.com/content/www/us/en/design/resource-design-center.html

Intel and the Intel logo are trademarks of Intel Corporation in the U.S. and/or other countries. Lustre is a registered trademark of Oracle Corporation.

*Other names and brands may be claimed as the property of others.

THE ORIGINAL LUSTRE 2.x FILESYSTEM: OPERATIONS MANUAL HAS BEEN MODIFIED: THIS OPERATIONS MANUAL IS A MODIFIED VERSION OF, AND IS DERIVED FROM, THE LUSTRE 2.0 FILESYSTEM: OPERATIONS MANUAL PUBLISHED BY ORACLE AND AVAILABLE AT [http://www.lustre.org/]. MODIFICATIONS (collectively, the “Modifications”) HAVE BEEN MADE BY INTEL CORPORATION (“Intel”). ORACLE AND ITS AFFILIATES HAVE NOT REVIEWED, APPROVED, SPONSORED, OR ENDORSED THIS MODIFIED OPERATIONS MANUAL, OR ENDORSED INTEL, AND ORACLE AND ITS AFFILIATES ARE NOT RESPONSIBLE OR LIABLE FOR ANY MODIFICATIONS THAT INTEL HAS MADE TO THE ORIGINAL OPERATIONS MANUAL.

NOTHING IN THIS MODIFIED OPERATIONS MANUAL IS INTENDED TO AFFECT THE NOTICE PROVIDED BY ORACLE BELOW IN RESPECT OF THE ORIGINAL OPERATIONS MANUAL AND SUCH ORACLE NOTICE CONTINUES TO APPLY TO THIS MODIFIED OPERATIONS MANUAL EXCEPT FOR THE MODIFICATIONS; THIS INTEL NOTICE SHALL APPLY ONLY TO MODIFICATIONS MADE BY INTEL. AS BETWEEN YOU AND ORACLE: (I) NOTHING IN THIS INTEL NOTICE IS INTENDED TO AFFECT THE TERMS OF THE ORACLE NOTICE BELOW; AND (II) IN THE EVENT OF ANY CONFLICT BETWEEN THE TERMS OF THIS INTEL NOTICE AND THE TERMS OF THE ORACLE NOTICE, THE ORACLE NOTICE SHALL PREVAIL.

Your use of any Intel software shall be governed by separate license terms containing restrictions on use and disclosure and are protected by intellectual property laws.

The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing.

This work is licensed under a Creative Commons Attribution-Share Alike 3.0 United States License. To view a copy of this license and obtain more information about Creative Commons licensing, visit Creative Commons Attribution-Share Alike 3.0 United States or send a letter to Creative Commons, 171 2nd Street, Suite 300, San Francisco, California 94105, USA.

Important Notice from Oracle

This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited.

The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing.

If this is software or related software documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, the following notice is applicable:

U.S. GOVERNMENT RIGHTS. Programs, software, databases, and related documentation and technical data delivered to U.S. Government customers are "commercial computer software" or "commercial technical data" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, the use, duplication, disclosure, modification, and adaptation shall be subject to the restrictions and license terms set forth in the applicable Government contract, and, to the extent applicable by the terms of the Government contract, the additional rights set forth in FAR 52.227-19, Commercial Computer Software License (December 2007). Oracle America, Inc., 500 Oracle Parkway, Redwood City, CA 94065.

This software or hardware is developed for general use in a variety of information management applications. It is not developed or intended for use in any inherently dangerous applications, including applications which may create a risk of personal injury. If you use this software or hardware in dangerous applications, then you shall be responsible to take all appropriate fail-safe, backup, redundancy, and other measures to ensure its safe use. Oracle Corporation and its affiliates disclaim any liability for any damages caused by use of this software or hardware in dangerous applications.

Oracle and Java are registered trademarks of Oracle and/or its affiliates. Other names may be trademarks of their respective owners.

AMD, Opteron, the AMD logo, and the AMD Opteron logo are trademarks or registered trademarks of Advanced Micro Devices. Intel and Intel Xeon are trademarks or registered trademarks of Intel Corporation. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. UNIX is a registered trademark licensed through X/Open Company, Ltd.

This software or hardware and documentation may provide access to or information on content, products, and services from third parties. Oracle Corporation and its affiliates are not responsible for and expressly disclaim all warranties of any kind with respect to third-party content, products, and services. Oracle Corporation and its affiliates will not be responsible for any loss, costs, or damages incurred due to your access to or use of third-party content, products, or services.

Copyright © 2011, Oracle et/ou ses affiliés. Tous droits réservés.

Ce logiciel et la documentation qui l’accompagne sont protégés par les lois sur la propriété intellectuelle. Ils sont concédés sous licence et soumis à des restrictions d’utilisation et de divulgation. Sauf disposition de votre contrat de licence ou de la loi, vous ne pouvez pas copier, reproduire, traduire, diffuser, modifier, breveter, transmettre, distribuer, exposer, exécuter, publier ou afficher le logiciel, même partiellement, sous quelque forme et par quelque procédé que ce soit. Par ailleurs, il est interdit de procéder à toute ingénierie inverse du logiciel, de le désassembler ou de le décompiler, excepté à des fins d’interopérabilité avec des logiciels tiers ou tel que prescrit par la loi.

Les informations fournies dans ce document sont susceptibles de modification sans préavis. Par ailleurs, Oracle Corporation ne garantit pas qu’elles soient exemptes d’erreurs et vous invite, le cas échéant, à lui en faire part par écrit.

Si ce logiciel, ou la documentation qui l’accompagne, est concédé sous licence au Gouvernement des Etats-Unis, ou à toute entité qui délivre la licence de ce logiciel ou l’utilise pour le compte du Gouvernement des Etats-Unis, la notice suivante s’applique :

U.S. GOVERNMENT RIGHTS. Programs, software, databases, and related documentation and technical data delivered to U.S. Government customers are "commercial computer software" or "commercial technical data" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, the use, duplication, disclosure, modification, and adaptation shall be subject to the restrictions and license terms set forth in the applicable Government contract, and, to the extent applicable by the terms of the Government contract, the additional rights set forth in FAR 52.227-19, Commercial Computer Software License (December 2007). Oracle America, Inc., 500 Oracle Parkway, Redwood City, CA 94065.

Ce logiciel ou matériel a été développé pour un usage général dans le cadre d’applications de gestion des informations. Ce logiciel ou matériel n’est pas conçu ni n’est destiné à être utilisé dans des applications à risque, notamment dans des applications pouvant causer des dommages corporels. Si vous utilisez ce logiciel ou matériel dans le cadre d’applications dangereuses, il est de votre responsabilité de prendre toutes les mesures de secours, de sauvegarde, de redondance et autres mesures nécessaires à son utilisation dans des conditions optimales de sécurité. Oracle Corporation et ses affiliés déclinent toute responsabilité quant aux dommages causés par l’utilisation de ce logiciel ou matériel pour ce type d’applications.

Oracle et Java sont des marques déposées d’Oracle Corporation et/ou de ses affiliés.Tout autre nom mentionné peut correspondre à des marques appartenant à d’autres propriétaires qu’Oracle.

AMD, Opteron, le logo AMD et le logo AMD Opteron sont des marques ou des marques déposées d’Advanced Micro Devices. Intel et Intel Xeon sont des marques ou des marques déposées d’Intel Corporation. Toutes les marques SPARC sont utilisées sous licence et sont des marques ou des marques déposées de SPARC International, Inc. UNIX est une marque déposée concédée sous licence par X/Open Company, Ltd.

Ce logiciel ou matériel et la documentation qui l’accompagne peuvent fournir des informations ou des liens donnant accès à des contenus, des produits et des services émanant de tiers. Oracle Corporation et ses affiliés déclinent toute responsabilité ou garantie expresse quant aux contenus, produits ou services émanant de tiers. En aucun cas, Oracle Corporation et ses affiliés ne sauraient être tenus pour responsables des pertes subies, des coûts occasionnés ou des dommages causés par l’accès à des contenus, produits ou services tiers, ou à leur utilisation.

This work is licensed under a Creative Commons Attribution-Share Alike 3.0 United States License. To view a copy of this license and obtain more information about Creative Commons licensing, visit Creative Commons Attribution-Share Alike 3.0 United States or send a letter to Creative Commons, 171 2nd Street, Suite 300, San Francisco, California 94105, USA.

Table of Contents

- Preface

- I. Introducing the Lustre* File System

- II. Installing and Configuring Lustre

- 4. Installation Overview

- 5. Determining Hardware Configuration Requirements and Formatting Options

- 6. Configuring Storage on a Lustre File System

- 7. Setting Up Network Interface Bonding

- 8. Installing the Lustre Software

- 9. Configuring Lustre Networking (LNet)

- 9.1. Configuring LNet via

lnetctlL 2.7 - 9.1.1. Configuring LNet

- 9.1.2. Displaying Global Settings

- 9.1.3. Adding, Deleting and Showing Networks

- 9.1.4. Manual Adding, Deleting and Showing PeersL 2.10

- 9.1.5. Dynamic Peer DiscoveryL 2.11

- 9.1.6. Adding, Deleting and Showing routes

- 9.1.7. Enabling and Disabling Routing

- 9.1.8. Showing routing information

- 9.1.9. Configuring Routing Buffers

- 9.1.10. Asymmetrical RoutesL 2.13

- 9.1.11. Importing YAML Configuration File

- 9.1.12. Exporting Configuration in YAML format

- 9.1.13. Showing LNet Traffic Statistics

- 9.1.14. YAML Syntax

- 9.2. Overview of LNet Module Parameters

- 9.3. Setting the LNet Module networks Parameter

- 9.4. Setting the LNet Module ip2nets Parameter

- 9.5. Setting the LNet Module routes Parameter

- 9.6. Testing the LNet Configuration

- 9.7. Configuring the Router Checker

- 9.8. Best Practices for LNet Options

- 9.1. Configuring LNet via

- 10. Configuring a Lustre File System

- 11. Configuring Failover in a Lustre File System

- III. Administering Lustre

- 12. Monitoring a Lustre File System

- 13. Lustre Operations

- 13.1. Mounting by Label

- 13.2. Starting Lustre

- 13.3. Mounting a Server

- 13.4. Stopping the Filesystem

- 13.5. Unmounting a Specific Target on a Server

- 13.6. Specifying Failout/Failover Mode for OSTs

- 13.7. Handling Degraded OST RAID Arrays

- 13.8. Running Multiple Lustre File Systems

- 13.9. Creating a sub-directory on a specific MDT

- 13.10. Creating a directory striped across multiple MDTsL 2.8

- 13.11. Default Dir Stripe Policy

- 13.12. Setting and Retrieving Lustre Parameters

- 13.13. Specifying NIDs and Failover

- 13.14. Erasing a File System

- 13.15. Reclaiming Reserved Disk Space

- 13.16. Replacing an Existing OST or MDT

- 13.17. Identifying To Which Lustre File an OST Object Belongs

- 14. Lustre Maintenance

- 14.1. Working with Inactive OSTs

- 14.2. Finding Nodes in the Lustre File System

- 14.3. Mounting a Server Without Lustre Service

- 14.4. Regenerating Lustre Configuration Logs

- 14.5. Changing a Server NID

- 14.6. Clearing configurationL 2.11

- 14.7. Adding a New MDT to a Lustre File System

- 14.8. Adding a New OST to a Lustre File System

- 14.9. Removing and Restoring MDTs and OSTs

- 14.10. Aborting Recovery

- 14.11. Determining Which Machine is Serving an OST

- 14.12. Changing the Address of a Failover Node

- 14.13. Separate a combined MGS/MDT

- 14.14. Set an MDT to read-onlyL 2.13

- 14.15. Tune Fallocate for ldiskfsL 2.14

- 15. Managing Lustre Networking (LNet)

- 16. LNet Software Multi-RailL 2.10

- 17. Upgrading a Lustre File System

- 18. Backing Up and Restoring a File System

- 18.1. Backing up a File System

- 18.2. Backing Up and Restoring an MDT or OST (ldiskfs Device Level)

- 18.3. Backing Up an OST or MDT (Backend File System Level)

- 18.4. Restoring a File-Level Backup

- 18.5. Using LVM Snapshots with the Lustre File System

- 18.6. Migration Between ZFS and ldiskfs Target Filesystems L 2.11

- 19. Managing File Layout (Striping) and Free Space

- 19.1. How Lustre File System Striping Works

- 19.2. Lustre File Layout (Striping) Considerations

- 19.3. Setting the File Layout/Striping Configuration (

lfs setstripe) - 19.4. Retrieving File Layout/Striping Information (

getstripe) - 19.5. Progressive File Layout(PFL)L 2.10

- 19.6. Self-Extending Layout (SEL)L 2.13

- 19.7. Foreign LayoutL 2.13

- 19.8. Managing Free Space

- 19.9. Lustre Striping Internals

- 20. Data on MDT (DoM)L 2.11

- 21. Lazy Size on MDT (LSoM)L 2.12

- 22. File Level Redundancy (FLR)L 2.11

- 23. Managing the File System and I/O

- 24. Lustre File System Failover and Multiple-Mount Protection

- 25. Configuring and Managing Quotas

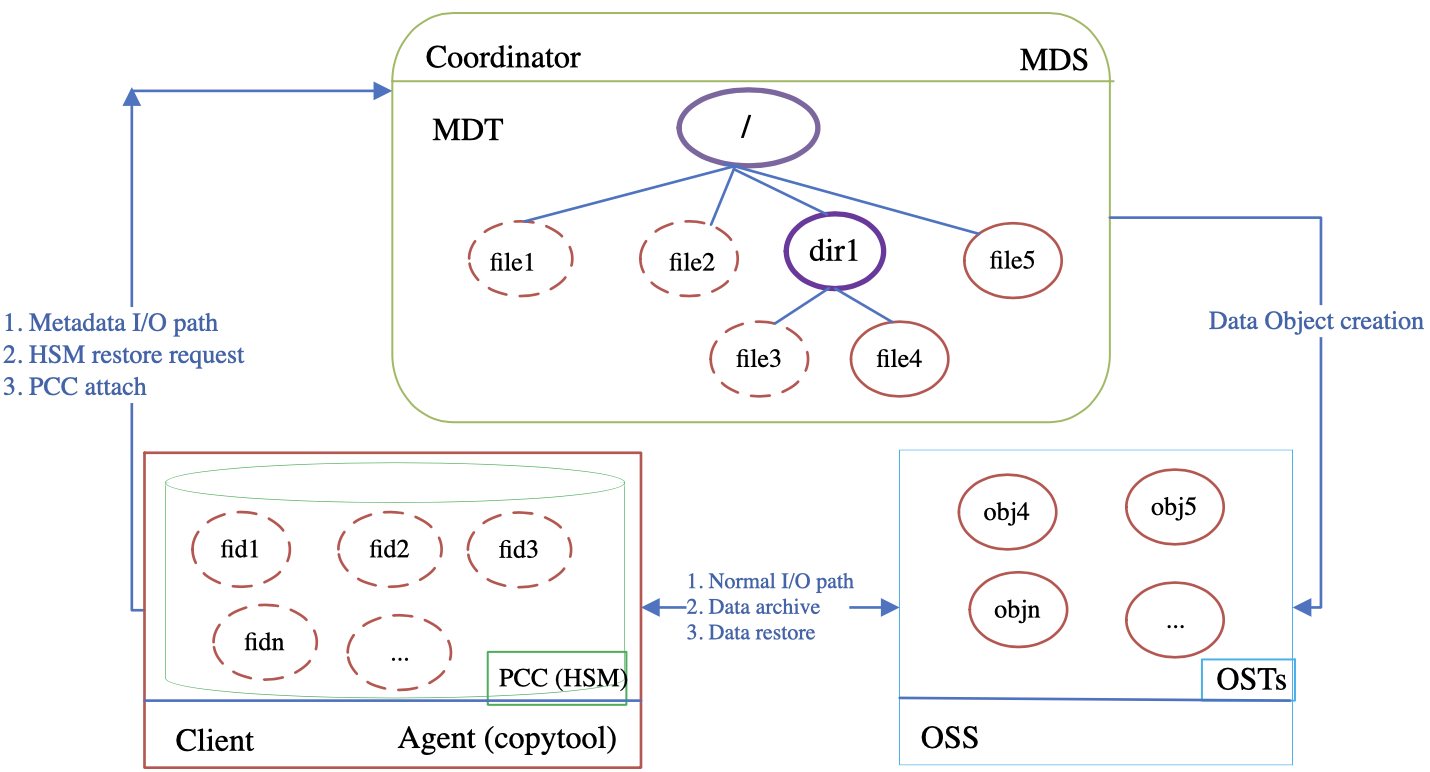

- 26. Hierarchical Storage Management (HSM)L 2.5

- 27. Persistent Client Cache (PCC)L 2.13

- 27.1. Introduction

- 27.2. Design

- 27.3. PCC Command Line Tools

- 27.3.1. Add a PCC backend on a client

- 27.3.2. Delete a PCC backend from a client

- 27.3.3. Remove all PCC backends on a client

- 27.3.4. List all PCC backends on a client

- 27.3.5. Attach given files into PCC

- 27.3.6. Attach given files into PCC by FID(s)

- 27.3.7. Detach given files from PCC

- 27.3.8. Detach given files from PCC by FID(s)

- 27.3.9. Display the PCC state for given files

- 27.4. PCC Configuration Example

- 28. Mapping UIDs and GIDs with NodemapL 2.9

- 29. Configuring Shared-Secret Key (SSK) SecurityL 2.9

- 30. Managing Security in a Lustre File System

- 31. Lustre ZFS SnapshotsL 2.10

- IV. Tuning a Lustre File System for Performance

- 32. Testing Lustre Network Performance (LNet Self-Test)

- 33. Benchmarking Lustre File System Performance (Lustre I/O Kit)

- 34. Tuning a Lustre File System

- 34.1. Optimizing the Number of Service Threads

- 34.2. Binding MDS Service Thread to CPU Partitions

- 34.3. Tuning LNet Parameters

- 34.4. libcfs Tuning

- 34.5. LND Tuning

- 34.6. Network Request Scheduler (NRS) Tuning

- 34.7. Lockless I/O Tunables

- 34.8. Server-Side Advice and Hinting L 2.9

- 34.9. Large Bulk IO (16MB RPC) L 2.9

- 34.10. Improving Lustre I/O Performance for Small Files

- 34.11. Understanding Why Write Performance is Better Than Read Performance

- V. Troubleshooting a Lustre File System

- 35. Lustre File System Troubleshooting

- 35.1. Lustre Error Messages

- 35.2. Reporting a Lustre File System Bug

- 35.3. Common Lustre File System Problems

- 35.3.1. OST Object is Missing or Damaged

- 35.3.2. OSTs Become Read-Only

- 35.3.3. Identifying a Missing OST

- 35.3.4. Fixing a Bad LAST_ID on an OST

- 35.3.5. Handling/Debugging "

Bind: Address already in use" Error - 35.3.6. Handling/Debugging Error "- 28"

- 35.3.7. Triggering Watchdog for PID NNN

- 35.3.8. Handling Timeouts on Initial Lustre File System Setup

- 35.3.9. Handling/Debugging "LustreError: xxx went back in time"

- 35.3.10. Lustre Error: "

Slow Start_Page_Write" - 35.3.11. Drawbacks in Doing Multi-client O_APPEND Writes

- 35.3.12. Slowdown Occurs During Lustre File System Startup

- 35.3.13. Log Message

'Out of Memory' on OST - 35.3.14. Setting SCSI I/O Sizes

- 36. Troubleshooting Recovery

- 37. Debugging a Lustre File System

- 37.1. Diagnostic and Debugging Tools

- 37.2. Lustre Debugging Procedures

- 37.2.1. Understanding the Lustre Debug Messaging Format

- 37.2.2. Using the lctl Tool to View Debug Messages

- 37.2.3. Dumping the Buffer to a File (

debug_daemon) - 37.2.4. Controlling Information Written to the Kernel Debug Log

- 37.2.5. Troubleshooting with

strace - 37.2.6. Looking at Disk Content

- 37.2.7. Finding the Lustre UUID of an OST

- 37.2.8. Printing Debug Messages to the Console

- 37.2.9. Tracing Lock Traffic

- 37.2.10. Controlling Console Message Rate Limiting

- 37.3. Lustre Debugging for Developers

- VI. Reference

- 38. Lustre File System Recovery

- 39. Lustre Parameters

- 39.1. Introduction to Lustre Parameters

- 39.2. Tuning Multi-Block Allocation (mballoc)

- 39.3. Monitoring Lustre File System I/O

- 39.4. Tuning Lustre File System I/O

- 39.5. Configuring Timeouts in a Lustre File System

- 39.6. Monitoring LNet

- 39.7. Allocating Free Space on OSTs

- 39.8. Configuring Locking

- 39.9. Setting MDS and OSS Thread Counts

- 39.10. Enabling and Interpreting Debugging Logs

- 40. User Utilities

- 41. Programming Interfaces

- 42. Setting Lustre Properties in a C Program (

llapi) - 43. Configuration Files and Module Parameters

- 44. System Configuration Utilities

- 44.1. l_getidentity

- 44.2. lctl

- 44.3. ll_decode_filter_fid

- 44.4. llobdstat

- 44.5. llog_reader

- 44.6. llstat

- 44.7. llverdev

- 44.8. lshowmount

- 44.9. lst

- 44.10. lustre_rmmod.sh

- 44.11. lustre_rsync

- 44.12. mkfs.lustre

- 44.13. mount.lustre

- 44.14. routerstat

- 44.15. tunefs.lustre

- 44.16. Additional System Configuration Utilities

- 45. LNet Configuration C-API

- 45.1. General API Information

- 45.2. The LNet Configuration C-API

- 45.2.1. Configuring LNet

- 45.2.2. Enabling and Disabling Routing

- 45.2.3. Adding Routes

- 45.2.4. Deleting Routes

- 45.2.5. Showing Routes

- 45.2.6. Adding a Network Interface

- 45.2.7. Deleting a Network Interface

- 45.2.8. Showing Network Interfaces

- 45.2.9. Adjusting Router Buffer Pools

- 45.2.10. Showing Routing information

- 45.2.11. Showing LNet Traffic Statistics

- 45.2.12. Adding/Deleting/Showing Parameters through a YAML Block

- 45.2.13. Adding a route code example

- Glossary

- Index

List of Figures

- 1.1. Lustre file system components in a basic cluster

- 1.2. Lustre cluster at scale

- 1.3. Layout EA on MDT pointing to file data on OSTs

- 1.4. Lustre client requesting file data

- 1.5. File striping on a Lustre file system

- 3.1. Lustre failover configuration for a active/passive MDT

- 3.2. Lustre failover configuration for a active/active MDTs

- 3.3. Lustre failover configuration for an OSTs

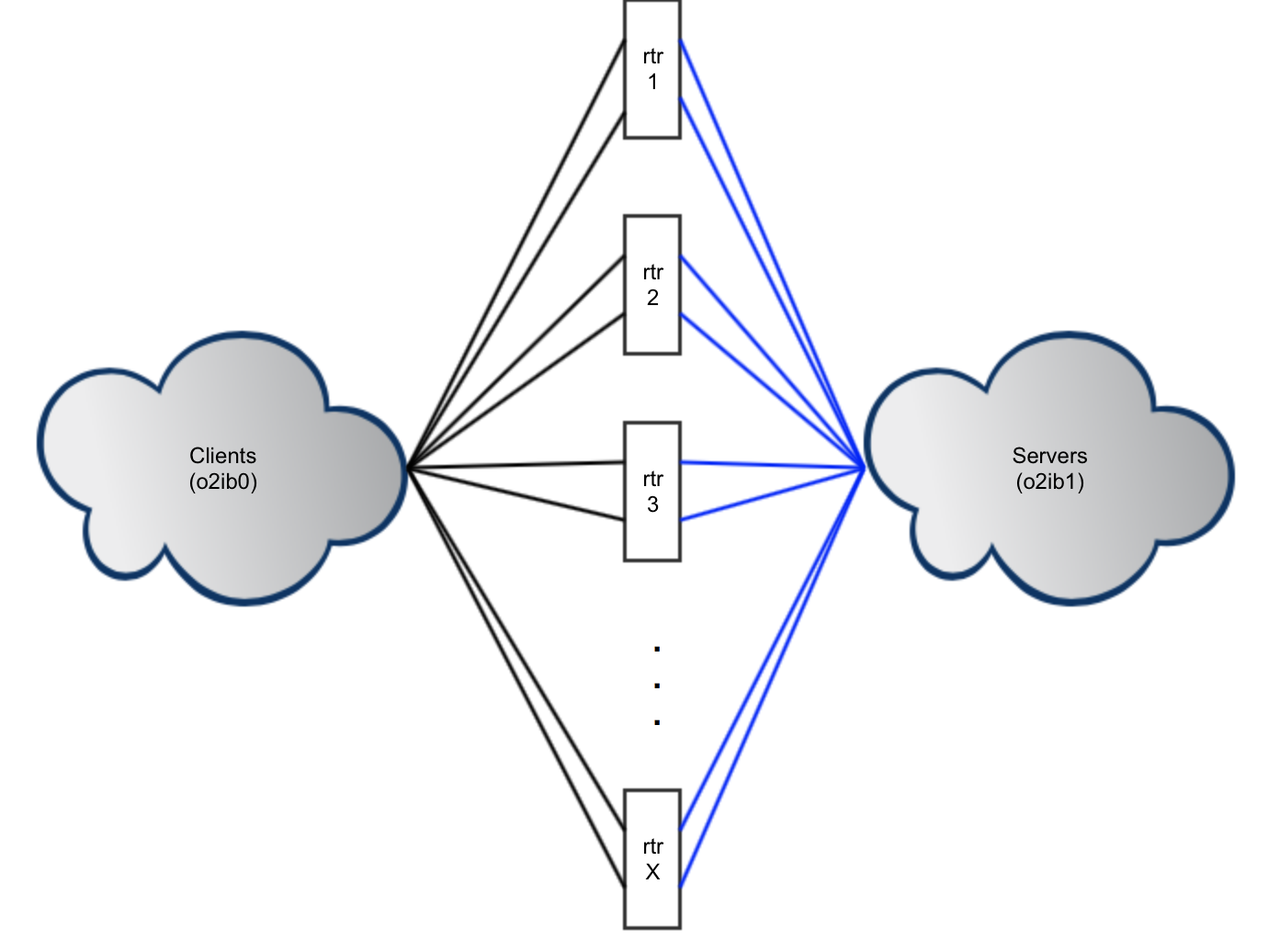

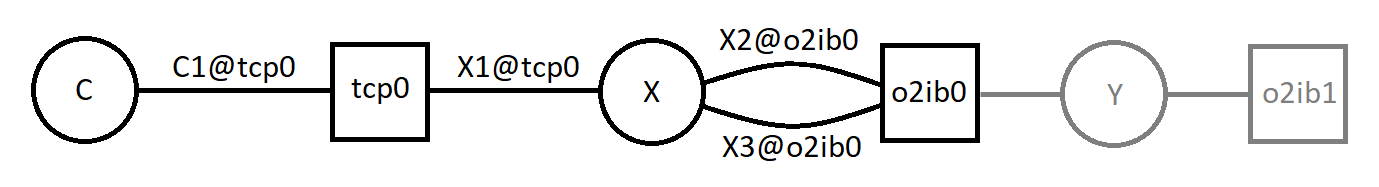

- 16.1. Routing Configuration with Multi-Rail

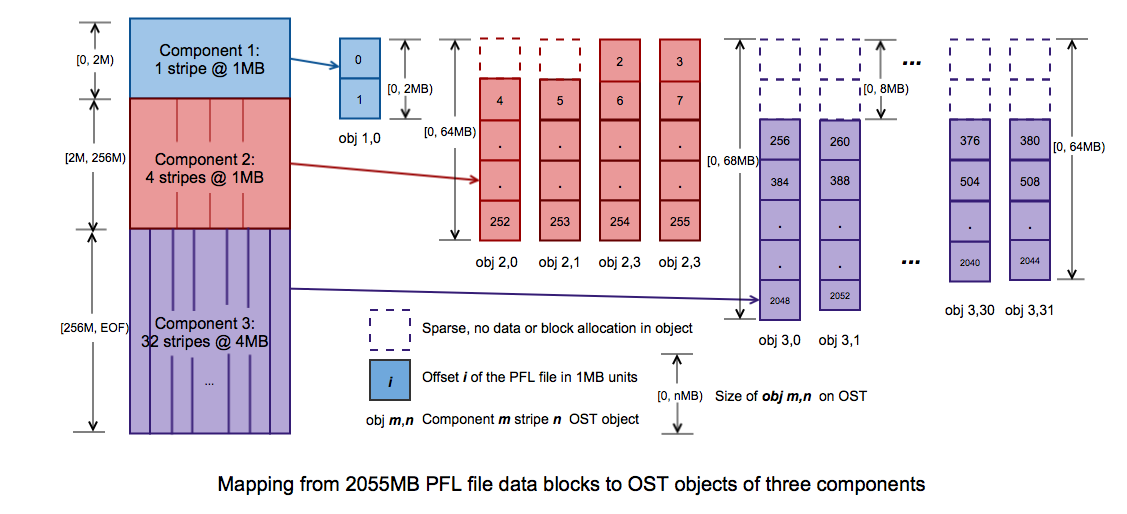

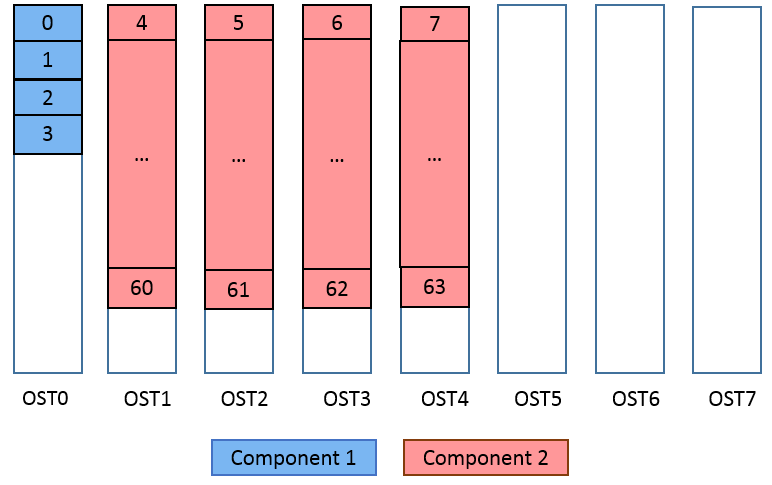

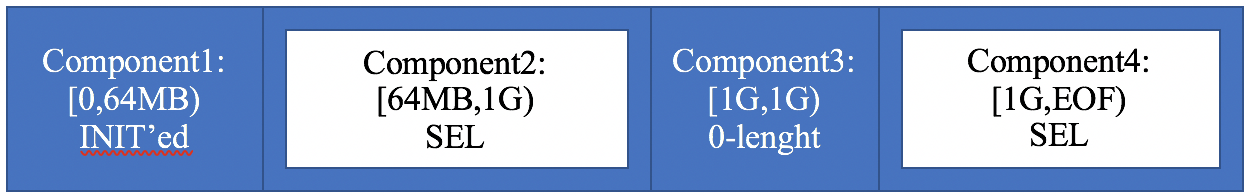

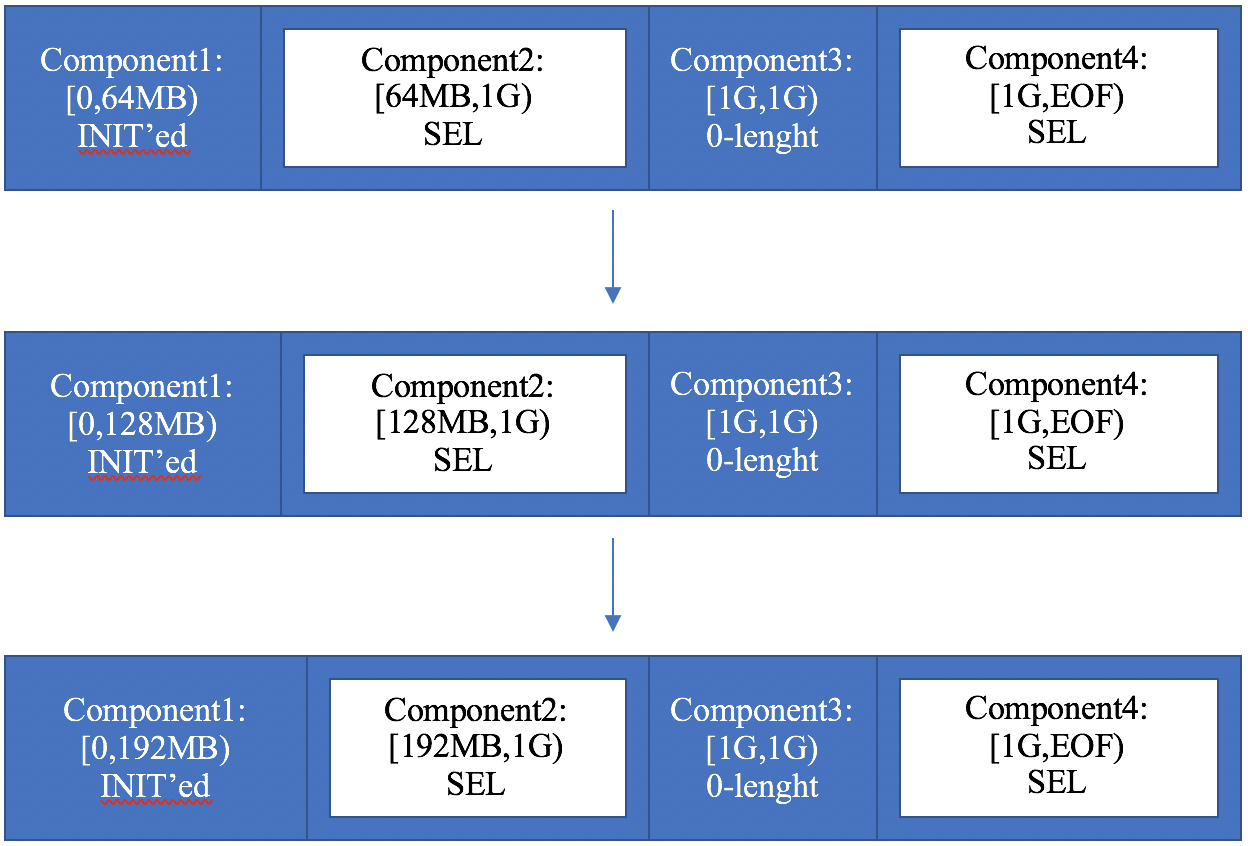

- 19.1. PFL object mapping diagram

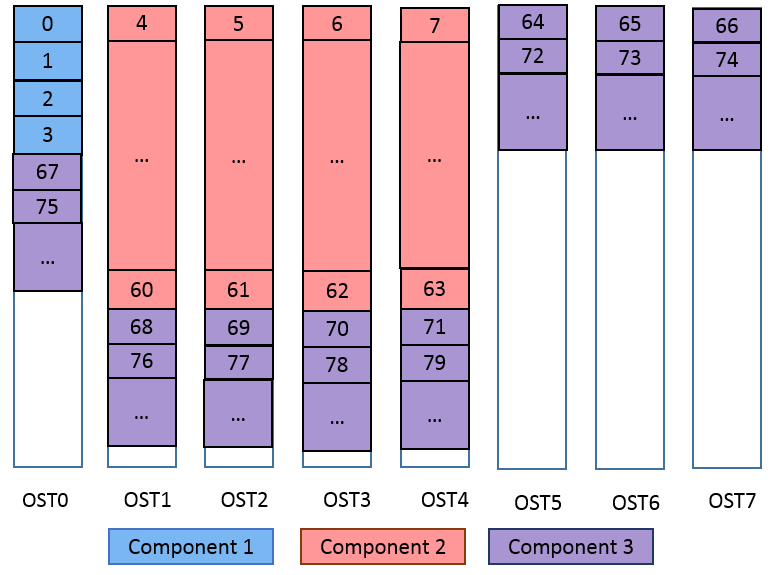

- 19.2. Example: create a composite file

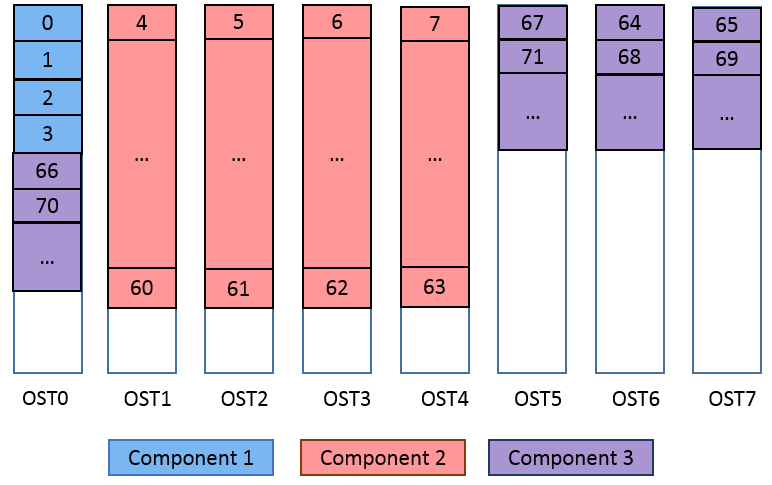

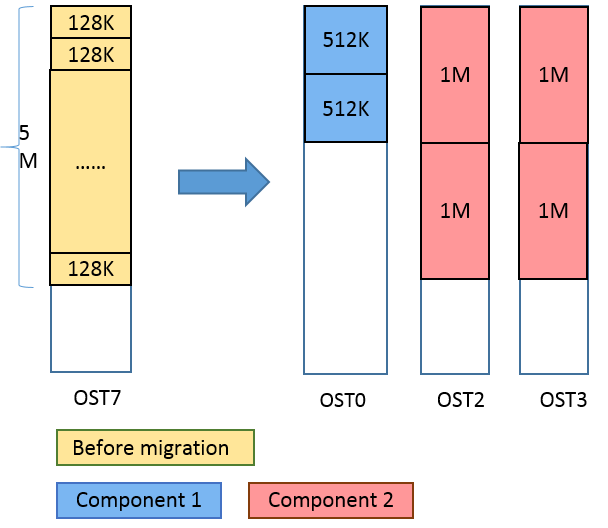

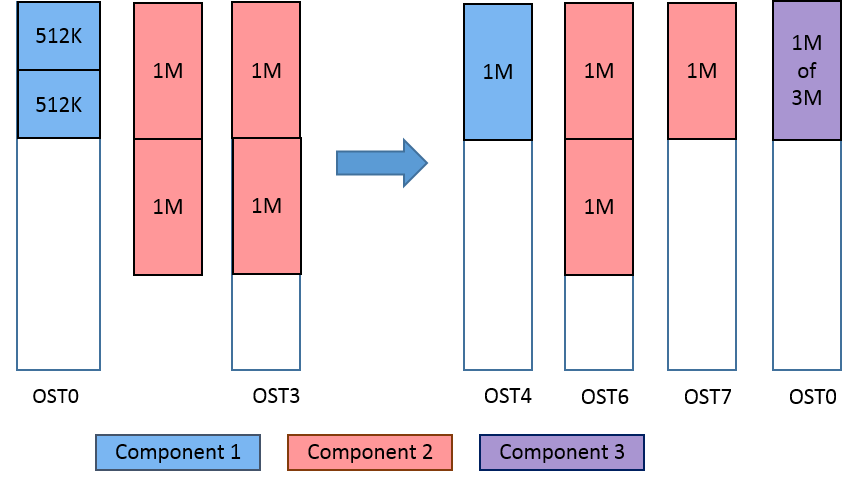

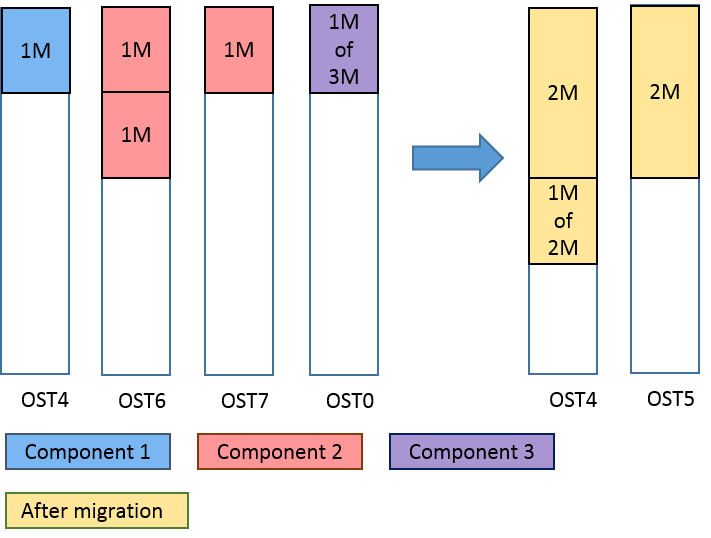

- 19.3. Example: add a component to an existing composite file

- 19.4. Example: delete a component from an existing file

- 19.5. Example: migrate normal to composite

- 19.6. Example: migrate composite to composite

- 19.7. Example: migrate composite to normal

- 19.8. Example: create a SEL file

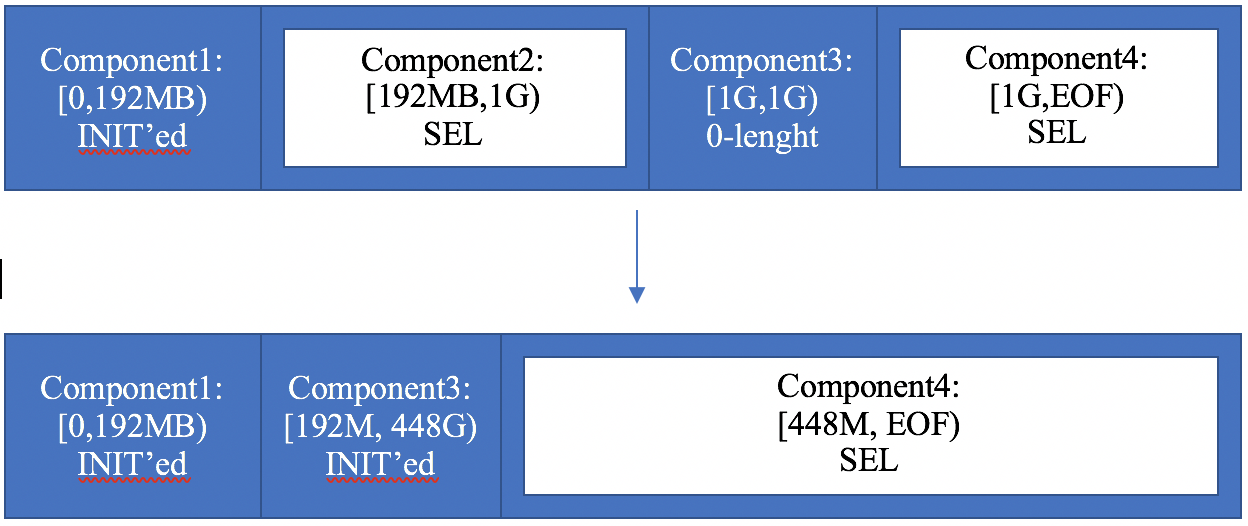

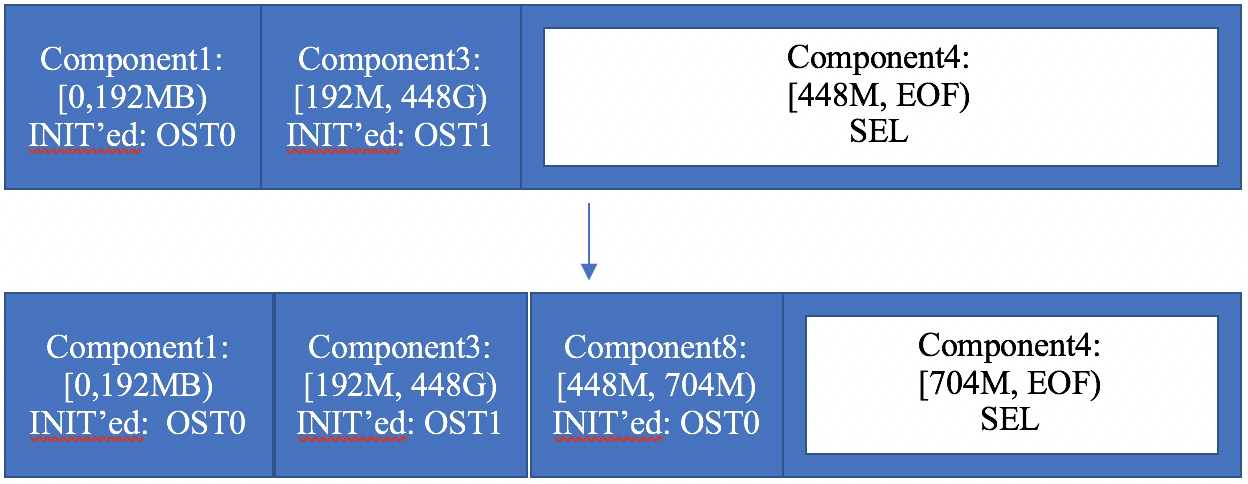

- 19.9. Example: an extension of a SEL file

- 19.10. Example: a spillover in a SEL file

- 19.11. Example: repeat a SEL component

- 19.12. Example: forced extension in a SEL file

- 19.13. LOV/LMV foreign format

- 19.14. Example: create a foreign file

- 20.1. Resulting file layout

- 22.1. FLR Delayed Write

- 26.1. Overview of the Lustre file system HSM

- 27.1. Overview of PCC-RW Architecture

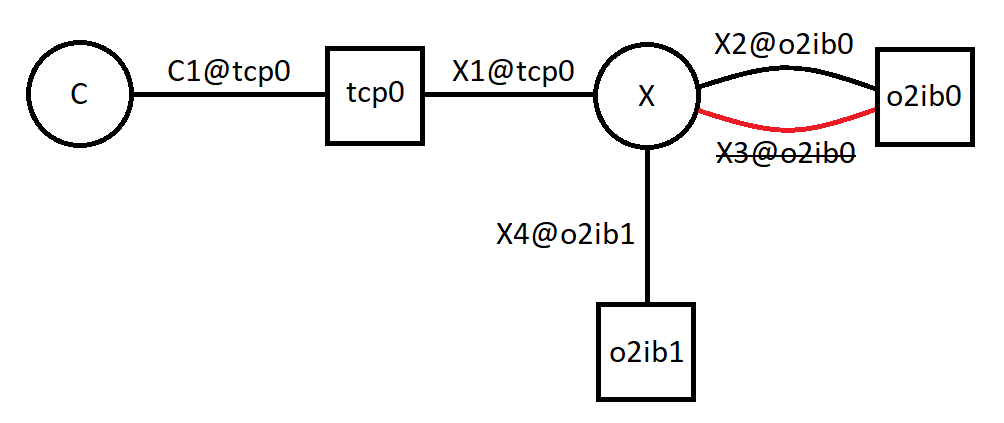

- 34.1. One of Two Connections to o2ib0 Down

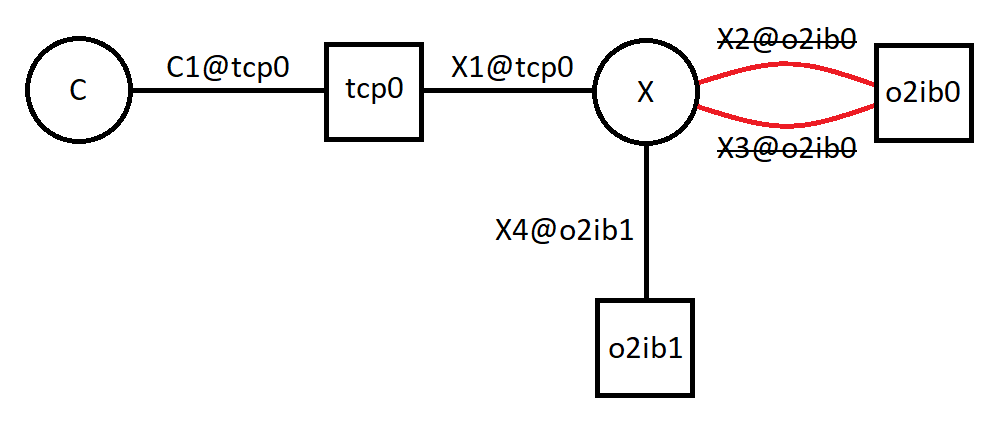

- 34.2. Both Connections to o2ib0 Down

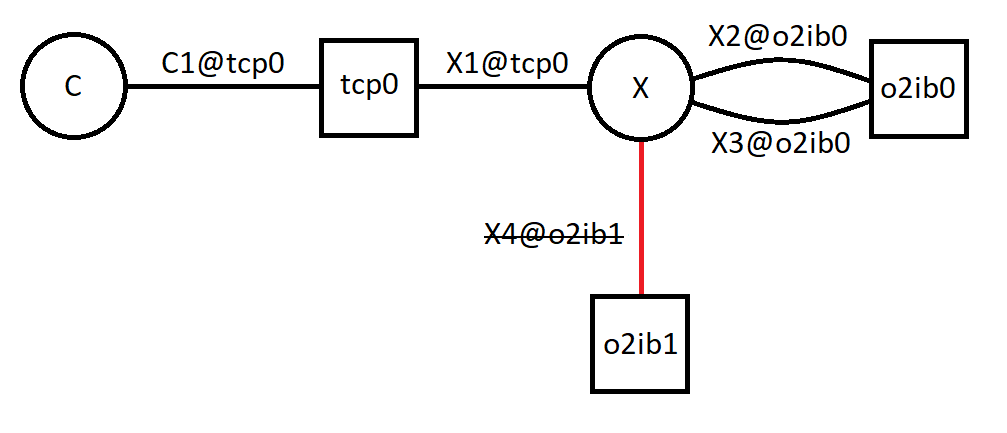

- 34.3. Connection to o2ib1 Down

- 34.4. Connection to o2ib1 Never Came Up

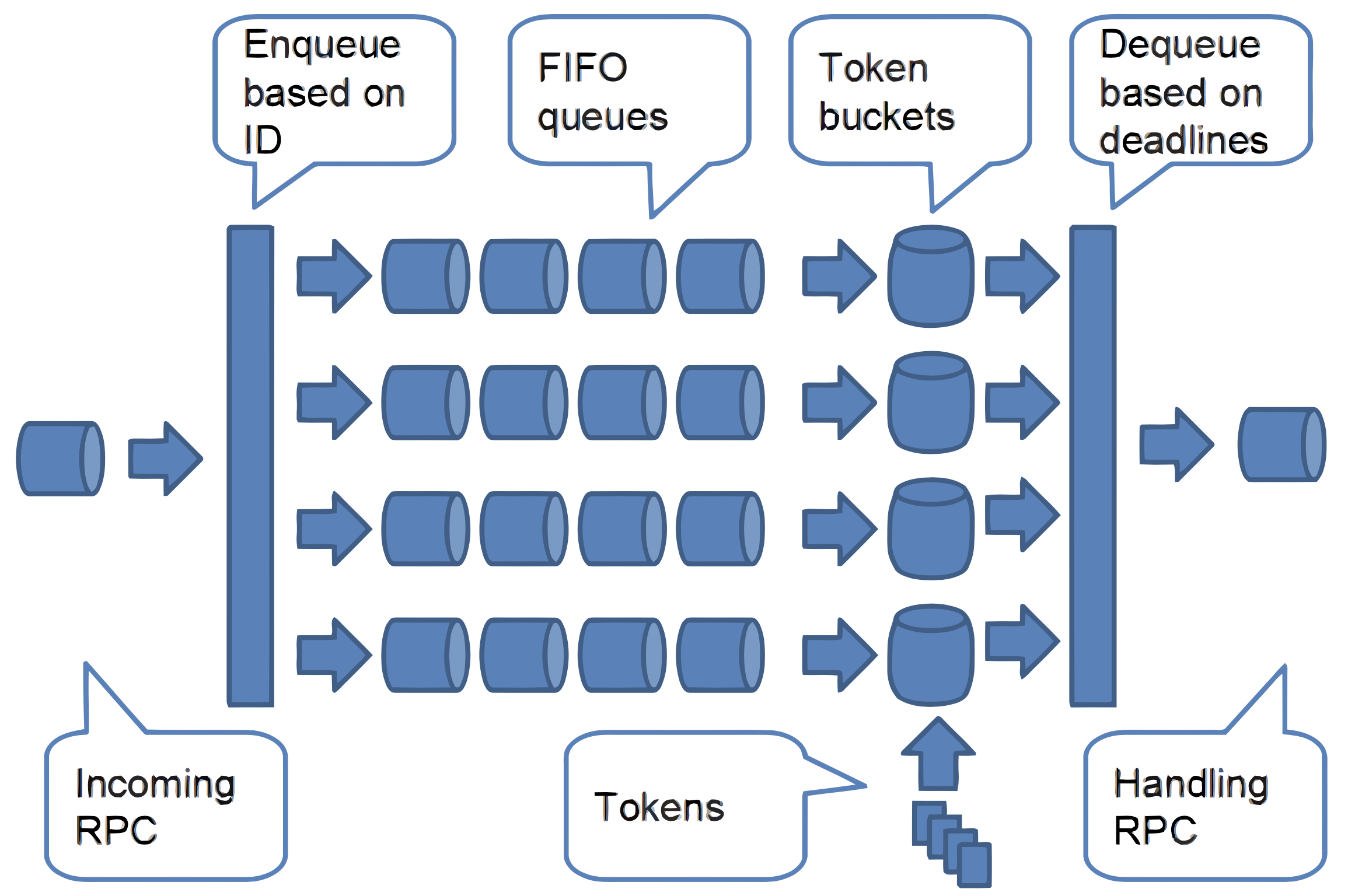

- 34.5. The internal structure of TBF policy

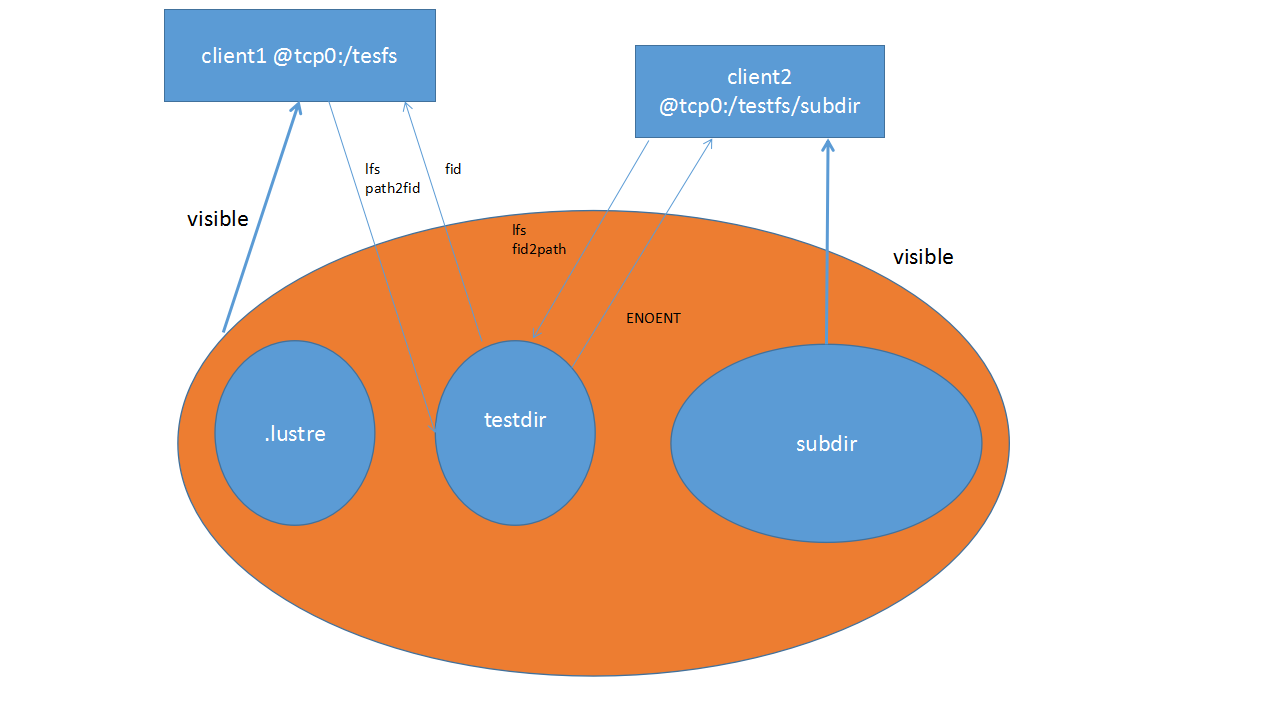

- 44.1. Lustre fileset

List of Tables

- 1.1. Lustre File System Scalability and Performance

- 1.2. Storage and hardware requirements for Lustre file system components

- 5.1. Default Inode Ratios Used for Newly Formatted OSTs

- 5.2. File and file system limits

- 8.1. Packages Installed on Lustre Servers

- 8.2. Packages Installed on Lustre Clients

- 8.3. Network Types Supported by Lustre LNDs

- 10.1. Default stripe pattern

- 16.1. Configuring Module Parameters

- 29.1. SSK Security Flavor Protections

- 29.2. lgss_sk Parameters

- 29.3. lsvcgssd Parameters

- 29.4. Key Descriptions

- 31.1. Write Barrier Status

List of Examples

- 34.1. lustre.conf

Table of Contents

The Lustre*Software Release 2.x Operations Manual provides detailed information and procedures to install, configure and tune a Lustre file system. The manual covers topics such as failover, quotas, striping, and bonding. This manual also contains troubleshooting information and tips to improve the operation and performance of a Lustre file system.

This document is maintained by Whamcloud in Docbook format. The canonical version is available at https://wiki.whamcloud.com/display/PUB/Documentation .

This document does not contain information about basic UNIX* operating system commands and procedures such as shutting down the system, booting the system, and configuring devices. Refer to the following for this information:

Software documentation that you received with your system

Red Hat* Enterprise Linux* documentation, which is at: https://docs.redhat.com/docs/en-US/index.html

Note

The Lustre client module is available for many different Linux* versions and distributions. The Red Hat Enterprise Linux distribution is the best supported and tested platform for Lustre servers.

The shell prompt used in the example text indicates whether a command can or should be executed by a regular user, or whether it requires superuser permission to run. Also, the machine type is often included in the prompt to indicate whether the command should be run on a client node, on an MDS node, an OSS node, or the MGS node.

Some examples are listed below, but other prompt combinations are also used as needed for the example.

|

Shell |

Prompt |

|---|---|

|

Regular user |

|

|

Superuser (root) |

|

|

Regular user on the client |

|

|

Superuser on the MDS |

|

|

Superuser on the OSS |

|

|

Superuser on the MGS |

|

|

Application |

Title |

Format |

Location |

|---|---|---|---|

|

Latest information |

Lustre Software Release 2.x Change Logs |

Wiki page |

Online at https://wiki.whamcloud.com/display/PUB/Documentation |

|

Service |

Lustre Software Release 2.x Operations Manual |

HTML |

Online at https://wiki.whamcloud.com/display/PUB/Documentation |

These web sites provide additional resources:

The Lustre* File System Release 2.x Operations Manual is a community maintained work. Versions of the manual are continually built as suggestions for changes and improvements arrive. Suggestions for improvements can be submitted through the ticketing system maintained at https://jira.whamcloud.com/browse/LUDOC. Instructions for providing a patch to the existing manual are available at: http://wiki.lustre.org/Lustre_Manual_Changes.

This manual covers a range of Lustre 2.x software releases, currently starting with the 2.5 release. Features specific to individual releases are identified within the table of contents using a shorthand notation (e.g. this paragraph is tagged as a Lustre 2.5 specific feature so that it will be updated when the 2.5-specific tagging is removed), and within the text using a distinct box.

Which version am I running?

The current version of Lustre that is in use on the node can be found

using the command lctl get_param version on any Lustre

client or server, for example:

$ lctl get_param version version=2.10.5

Only the latest revision of this document is made readily available because changes are continually arriving. The current and latest revision of this manual is available from links maintained at: http://lustre.opensfs.org/documentation/.

| Revision History | ||

|---|---|---|

| Revision 0 | Built on 28 April 2024 03:57:11Z | |

| Continuous build of Manual. | ||

Part I provides background information to help you understand the Lustre file system architecture and how the major components fit together. You will find information in this section about:

Table of Contents

This chapter describes the Lustre architecture and features of the Lustre file system. It includes the following sections:

The Lustre architecture is a storage architecture for clusters. The central component of the Lustre architecture is the Lustre file system, which is supported on the Linux operating system and provides a POSIX *standard-compliant UNIX file system interface.

The Lustre storage architecture is used for many different kinds of clusters. It is best known for powering many of the largest high-performance computing (HPC) clusters worldwide, with tens of thousands of client systems, petabytes (PiB) of storage and hundreds of gigabytes per second (GB/sec) of I/O throughput. Many HPC sites use a Lustre file system as a site-wide global file system, serving dozens of clusters.

The ability of a Lustre file system to scale capacity and performance for any need reduces the need to deploy many separate file systems, such as one for each compute cluster. Storage management is simplified by avoiding the need to copy data between compute clusters. In addition to aggregating storage capacity of many servers, the I/O throughput is also aggregated and scales with additional servers. Moreover, throughput and/or capacity can be easily increased by adding servers dynamically.

While a Lustre file system can function in many work environments, it is not necessarily the best choice for all applications. It is best suited for uses that exceed the capacity that a single server can provide, though in some use cases, a Lustre file system can perform better with a single server than other file systems due to its strong locking and data coherency.

A Lustre file system is currently not particularly well suited for "peer-to-peer" usage models where clients and servers are running on the same node, each sharing a small amount of storage, due to the lack of data replication at the Lustre software level. In such uses, if one client/server fails, then the data stored on that node will not be accessible until the node is restarted.

Lustre file systems run on a variety of vendor's kernels. For more details, see the Lustre Test Matrix Section 8.1, “ Preparing to Install the Lustre Software”.

A Lustre installation can be scaled up or down with respect to the number of client nodes, disk storage and bandwidth. Scalability and performance are dependent on available disk and network bandwidth and the processing power of the servers in the system. A Lustre file system can be deployed in a wide variety of configurations that can be scaled well beyond the size and performance observed in production systems to date.

Table 1.1, “Lustre File System Scalability and Performance” shows some of the scalability and performance characteristics of a Lustre file system. For a full list of Lustre file and filesystem limits see Table 5.2, “File and file system limits”.

Table 1.1. Lustre File System Scalability and Performance

|

Feature |

Current Practical Range |

Known Production Usage |

|---|---|---|

|

Client Scalability |

100-100000 |

50000+ clients, many in the 10000 to 20000 range |

|

Client Performance |

Single client: I/O 90% of network bandwidth Aggregate: 50 TB/sec I/O, 50M IOPS |

Single client: 15 GB/sec I/O (HDR IB), 50000 IOPS Aggregate: 10 TB/sec I/O, 10M IOPS |

|

OSS Scalability |

Single OSS: 1-32 OSTs per OSS Single OST: 500M objects, 1024TiB per OST OSS count: 1000 OSSs, 4000 OSTs |

Single OSS: 4 OSTs per OSS Single OST: 1024TiB OSTs OSS count: 450 OSSs with 900 750TiB HDD OSTs + 450 25TiB NVMe OSTs 1024 OSSs with 1024 72TiB OSTs |

|

OSS Performance |

Single OSS: 15 GB/sec, 1.5M IOPS Aggregate: 50 TB/sec, 50M IOPS |

Single OSS: 10 GB/sec, 1.5M IOPS Aggregate: 20 TB/sec, 20M IOPS |

|

MDS Scalability |

Single MDS: 1-4 MDTs per MDS Single MDT: 4 billion files, 16TiB per MDT (ldiskfs) 64 billion files, 64TiB per MDT (ZFS) MDS count: 256 MDSs, up to 256 MDTs |

Single MDS: 4 billion files MDS count: 40 MDS with 40 4TiB MDTs in production 256 MDS with 256 64GiB MDTs in testing |

|

MDS Performance |

1M/s create operations 2M/s stat operations |

100k/s create operations, 200k/s metadata stat operations |

|

File system Scalability |

Single File: 32 PiB max file size (ldiskfs) 2^63 bytes (ZFS) Aggregate: 512 PiB space, 1 trillion files |

Single File: multi-TiB max file size Aggregate: 700 PiB space, 25 billion files |

Other Lustre software features are:

Performance-enhanced ext4 file system:The Lustre file system uses an improved version of the ext4 journaling file system to store data and metadata. This version, called

ldiskfs, has been enhanced to improve performance and provide additional functionality needed by the Lustre file system.It is also possible to use ZFS as the backing filesystem for Lustre for the MDT, OST, and MGS storage. This allows Lustre to leverage the scalability and data integrity features of ZFS for individual storage targets.

POSIX standard compliance:The full POSIX test suite passes in an identical manner to a local ext4 file system, with limited exceptions on Lustre clients. In a cluster, most operations are atomic so that clients never see stale data or metadata. The Lustre software supports mmap() file I/O.

High-performance heterogeneous networking:The Lustre software supports a variety of high performance, low latency networks and permits Remote Direct Memory Access (RDMA) for InfiniBand *(utilizing OpenFabrics Enterprise Distribution (OFED*), Intel OmniPath®, and other advanced networks for fast and efficient network transport. Multiple RDMA networks can be bridged using Lustre routing for maximum performance. The Lustre software also includes integrated network diagnostics.

High-availability:The Lustre file system supports active/active failover using shared storage partitions for OSS targets (OSTs), and for MDS targets (MDTs). The Lustre file system can work with a variety of high availability (HA) managers to allow automated failover and has no single point of failure (NSPF). This allows application transparent recovery. Multiple mount protection (MMP) provides integrated protection from errors in highly-available systems that would otherwise cause file system corruption.

Security:By default TCP connections are only allowed from privileged ports. UNIX group membership is verified on the MDS.

Access control list (ACL), extended attributes:the Lustre security model follows that of a UNIX file system, enhanced with POSIX ACLs. Noteworthy additional features include root squash.

Interoperability:The Lustre file system runs on a variety of CPU architectures and mixed-endian clusters and is interoperable between successive major Lustre software releases.

Object-based architecture:Clients are isolated from the on-disk file structure enabling upgrading of the storage architecture without affecting the client.

Byte-granular file and fine-grained metadata locking:Many clients can read and modify the same file or directory concurrently. The Lustre distributed lock manager (LDLM) ensures that files are coherent between all clients and servers in the file system. The MDT LDLM manages locks on inode permissions and pathnames. Each OST has its own LDLM for locks on file stripes stored thereon, which scales the locking performance as the file system grows.

Quotas:User and group quotas are available for a Lustre file system.

Capacity growth:The size of a Lustre file system and aggregate cluster bandwidth can be increased without interruption by adding new OSTs and MDTs to the cluster.

Controlled file layout:The layout of files across OSTs can be configured on a per file, per directory, or per file system basis. This allows file I/O to be tuned to specific application requirements within a single file system. The Lustre file system uses RAID-0 striping and balances space usage across OSTs.

Network data integrity protection:A checksum of all data sent from the client to the OSS protects against corruption during data transfer.

MPI I/O:The Lustre architecture has a dedicated MPI ADIO layer that optimizes parallel I/O to match the underlying file system architecture.

NFS and CIFS export:Lustre files can be re-exported using NFS (via Linux knfsd or Ganesha) or CIFS (via Samba), enabling them to be shared with non-Linux clients such as Microsoft*Windows, *Apple *Mac OS X *, and others.

Disaster recovery tool:The Lustre file system provides an online distributed file system check (LFSCK) that can restore consistency between storage components in case of a major file system error. A Lustre file system can operate even in the presence of file system inconsistencies, and LFSCK can run while the filesystem is in use, so LFSCK is not required to complete before returning the file system to production.

Performance monitoring:The Lustre file system offers a variety of mechanisms to examine performance and tuning.

Open source:The Lustre software is licensed under the GPL 2.0 license for use with the Linux operating system.

An installation of the Lustre software includes a management server (MGS) and one or more Lustre file systems interconnected with Lustre networking (LNet).

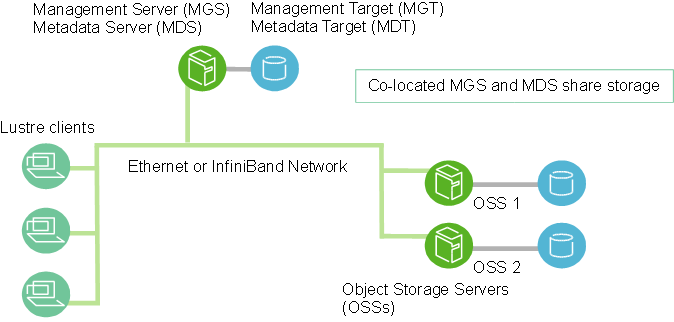

A basic configuration of Lustre file system components is shown in Figure 1.1, “Lustre file system components in a basic cluster”.

The MGS stores configuration information for all the Lustre file systems in a cluster and provides this information to other Lustre components. Each Lustre target contacts the MGS to provide information, and Lustre clients contact the MGS to retrieve information.

It is preferable that the MGS have its own storage space so that it can be managed independently. However, the MGS can be co-located and share storage space with an MDS as shown in Figure 1.1, “Lustre file system components in a basic cluster”.

Each Lustre file system consists of the following components:

Metadata Servers (MDS)- The MDS makes metadata stored in one or more MDTs available to Lustre clients. Each MDS manages the names and directories in the Lustre file system(s) and provides network request handling for one or more local MDTs.

Metadata Targets (MDT) - Each filesystem has at least one MDT, which holds the root directory. The MDT stores metadata (such as filenames, directories, permissions and file layout) on storage attached to an MDS. Each file system has one MDT. An MDT on a shared storage target can be available to multiple MDSs, although only one can access it at a time. If an active MDS fails, a second MDS node can serve the MDT and make it available to clients. This is referred to as MDS failover.

Multiple MDTs are supported with the Distributed Namespace Environment (Distributed Namespace Environment (DNE)). In addition to the primary MDT that holds the filesystem root, it is possible to add additional MDS nodes, each with their own MDTs, to hold sub-directory trees of the filesystem.

Introduced in Lustre 2.8Since Lustre software release 2.8, DNE also allows the filesystem to distribute files of a single directory over multiple MDT nodes. A directory which is distributed across multiple MDTs is known as a Striped Directory.

Object Storage Servers (OSS): The OSS provides file I/O service and network request handling for one or more local OSTs. Typically, an OSS serves between two and eight OSTs, up to 16 TiB each. A typical configuration is an MDT on a dedicated node, two or more OSTs on each OSS node, and a client on each of a large number of compute nodes.

Object Storage Target (OST): User file data is stored in one or more objects, each object on a separate OST in a Lustre file system. The number of objects per file is configurable by the user and can be tuned to optimize performance for a given workload.

Lustre clients: Lustre clients are computational, visualization or desktop nodes that are running Lustre client software, allowing them to mount the Lustre file system.

The Lustre client software provides an interface between the Linux virtual file system and the Lustre servers. The client software includes a management client (MGC), a metadata client (MDC), and multiple object storage clients (OSCs), one corresponding to each OST in the file system.

A logical object volume (LOV) aggregates the OSCs to provide transparent access across all the OSTs. Thus, a client with the Lustre file system mounted sees a single, coherent, synchronized namespace. Several clients can write to different parts of the same file simultaneously, while, at the same time, other clients can read from the file.

A logical metadata volume (LMV) aggregates the MDCs to provide transparent access across all the MDTs in a similar manner as the LOV does for file access. This allows the client to see the directory tree on multiple MDTs as a single coherent namespace, and striped directories are merged on the clients to form a single visible directory to users and applications.

Table 1.2, “ Storage and hardware requirements for Lustre file system components”provides the requirements for attached storage for each Lustre file system component and describes desirable characteristics of the hardware used.

Table 1.2. Storage and hardware requirements for Lustre file system components

|

|

Required attached storage |

Desirable hardware characteristics |

|---|---|---|

|

MDSs |

1-2% of file system capacity |

Adequate CPU power, plenty of memory, fast disk storage. |

|

OSSs |

1-128 TiB per OST, 1-8 OSTs per OSS |

Good bus bandwidth. Recommended that storage be balanced evenly across OSSs and matched to network bandwidth. |

|

Clients |

No local storage needed |

Low latency, high bandwidth network. |

For additional hardware requirements and considerations, see Chapter 5, Determining Hardware Configuration Requirements and Formatting Options.

Lustre Networking (LNet) is a custom networking API that provides the communication infrastructure that handles metadata and file I/O data for the Lustre file system servers and clients. For more information about LNet, see Chapter 2, Understanding Lustre Networking (LNet).

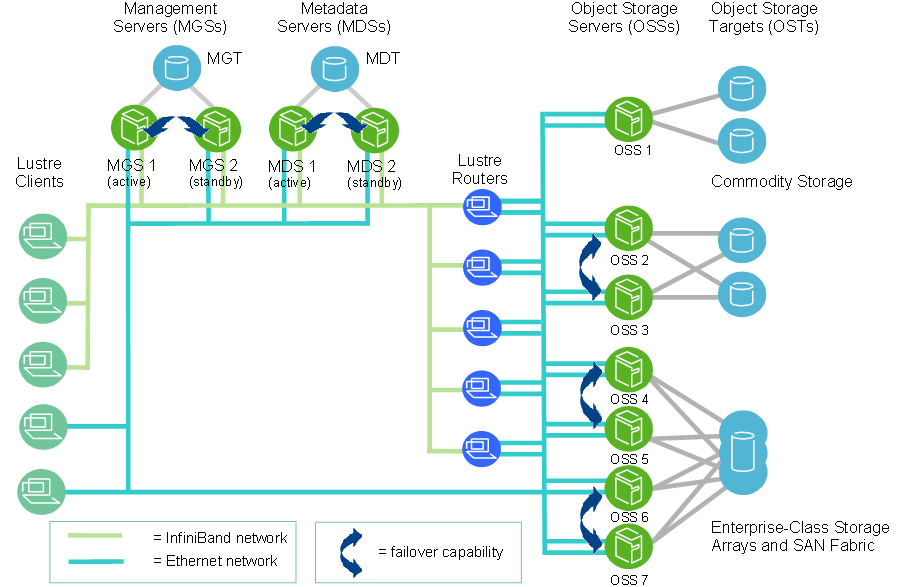

At scale, a Lustre file system cluster can include hundreds of OSSs and thousands of clients (see Figure 1.2, “ Lustre cluster at scale”). More than one type of network can be used in a Lustre cluster. Shared storage between OSSs enables failover capability. For more details about OSS failover, see Chapter 3, Understanding Failover in a Lustre File System.

Lustre File IDentifiers (FIDs) are used internally for identifying files or objects, similar to inode numbers in local filesystems. A FID is a 128-bit identifier, which contains a unique 64-bit sequence number (SEQ), a 32-bit object ID (OID), and a 32-bit version number. The sequence number is unique across all Lustre targets in a file system (OSTs and MDTs). This allows multiple MDTs and OSTs to uniquely identify objects without depending on identifiers in the underlying filesystem (e.g. inode numbers) that are likely to be duplicated between targets. The FID SEQ number also allows mapping a FID to a particular MDT or OST.

The LFSCK file system consistency checking tool provides functionality that enables FID-in-dirent for existing files. It includes the following functionality:

Verifies the FID stored with each directory entry and regenerates it from the inode if it is invalid or missing.

Verifies the linkEA entry for each inode and regenerates it if invalid or missing. The linkEA stores the file name and parent FID. It is stored as an extended attribute in each inode. Thus, the linkEA can be used to reconstruct the full path name of a file from only the FID.

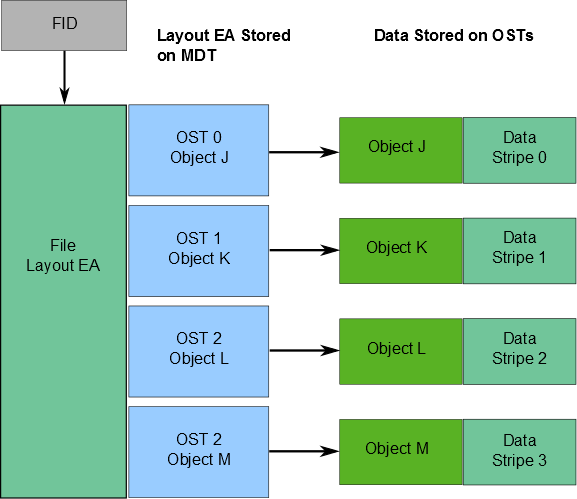

Information about where file data is located on the OST(s) is stored as an extended attribute called layout EA in an MDT object identified by the FID for the file (see Figure 1.3, “Layout EA on MDT pointing to file data on OSTs”). If the file is a regular file (not a directory or symbol link), the MDT object points to 1-to-N OST object(s) on the OST(s) that contain the file data. If the MDT file points to one object, all the file data is stored in that object. If the MDT file points to more than one object, the file data is striped across the objects using RAID 0, and each object is stored on a different OST. (For more information about how striping is implemented in a Lustre file system, see Section 1.3.1, “ Lustre File System and Striping”.

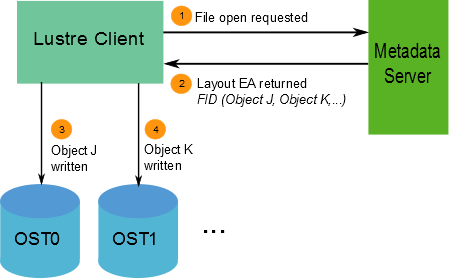

When a client wants to read from or write to a file, it first fetches the layout EA from the MDT object for the file. The client then uses this information to perform I/O on the file, directly interacting with the OSS nodes where the objects are stored. This process is illustrated in Figure 1.4, “Lustre client requesting file data” .

The available bandwidth of a Lustre file system is determined as follows:

The network bandwidth equals the aggregated bandwidth of the OSSs to the targets.

The disk bandwidth equals the sum of the disk bandwidths of the storage targets (OSTs) up to the limit of the network bandwidth.

The aggregate bandwidth equals the minimum of the disk bandwidth and the network bandwidth.

The available file system space equals the sum of the available space of all the OSTs.

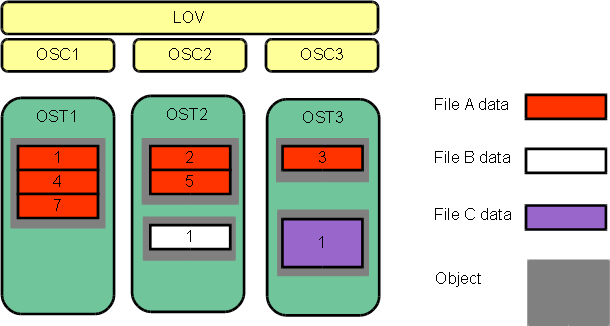

One of the main factors leading to the high performance of Lustre file systems is the ability to stripe data across multiple OSTs in a round-robin fashion. Users can optionally configure for each file the number of stripes, stripe size, and OSTs that are used.

Striping can be used to improve performance when the aggregate bandwidth to a single file exceeds the bandwidth of a single OST. The ability to stripe is also useful when a single OST does not have enough free space to hold an entire file. For more information about benefits and drawbacks of file striping, see Section 19.2, “ Lustre File Layout (Striping) Considerations”.

Striping allows segments or 'chunks' of data in a file to be stored

on different OSTs, as shown in

Figure 1.5, “File striping on a

Lustre file system”. In the Lustre file

system, a RAID 0 pattern is used in which data is "striped" across a

certain number of objects. The number of objects in a single file is

called the

stripe_count.

Each object contains a chunk of data from the file. When the chunk

of data being written to a particular object exceeds the

stripe_size, the next chunk of data in the file is

stored on the next object.

Default values for

stripe_count and

stripe_size are set for the file system. The default

value for

stripe_count is 1 stripe for file and the default value

for

stripe_size is 1MB. The user may change these values on

a per directory or per file basis. For more details, see

Section 19.3, “Setting the File Layout/Striping Configuration (lfs

setstripe)”.

Figure 1.5, “File striping on a

Lustre file system”, the

stripe_size for File C is larger than the

stripe_size for File A, allowing more data to be stored

in a single stripe for File C. The

stripe_count for File A is 3, resulting in data striped

across three objects, while the

stripe_count for File B and File C is 1.

No space is reserved on the OST for unwritten data. File A in Figure 1.5, “File striping on a Lustre file system”.

The maximum file size is not limited by the size of a single target. In a Lustre file system, files can be striped across multiple objects (up to 2000), and each object can be up to 16 TiB in size with ldiskfs, or up to 256PiB with ZFS. This leads to a maximum file size of 31.25 PiB for ldiskfs or 8EiB with ZFS. Note that a Lustre file system can support files up to 2^63 bytes (8EiB), limited only by the space available on the OSTs.

Note

ldiskfs filesystems without the ea_inode

feature limit the maximum stripe count for a single file to 160 OSTs.

Although a single file can only be striped over 2000 objects, Lustre file systems can have thousands of OSTs. The I/O bandwidth to access a single file is the aggregated I/O bandwidth to the objects in a file, which can be as much as a bandwidth of up to 2000 servers. On systems with more than 2000 OSTs, clients can do I/O using multiple files to utilize the full file system bandwidth.

For more information about striping, see Chapter 19, Managing File Layout (Striping) and Free Space.

Extended Attributes(xattrs)

Lustre uses lov_user_md_v1/lov_user_md_v3 data-structures to

maintain its file striping information under xattrs. Extended

attributes are created when files and directory are created. Lustre

uses trusted extended attributes to store its

parameters which are root-only accessible. The parameters are:

trusted.lov: Holds layout for a regular file, or default file layout stored on a directory (also accessible aslustre.lovfor non-root users).trusted.lma: Holds FID and extra state flags for current filetrusted.lmv: Holds layout for a striped directory (DNE 2), not present otherwisetrusted.link: Holds parent directory FID + filename for each link to a file (forlfs fid2path)

xattr which are stored and present in the file could be verify using:

# getfattr -d -m - /mnt/testfs/file>

Table of Contents

This chapter introduces Lustre networking (LNet). It includes the following sections:

In a cluster using one or more Lustre file systems, the network communication infrastructure required by the Lustre file system is implemented using the Lustre networking (LNet) feature.

LNet supports many commonly-used network types, such as InfiniBand and IP networks, and allows simultaneous availability across multiple network types with routing between them. Remote direct memory access (RDMA) is permitted when supported by underlying networks using the appropriate Lustre network driver (LND). High availability and recovery features enable transparent recovery in conjunction with failover servers.

An LND is a pluggable driver that provides support for a particular network type, for

example ksocklnd is the driver which implements the TCP Socket LND that

supports TCP networks. LNDs are loaded into the driver stack, with one LND for each network

type in use.

For information about configuring LNet, see Chapter 9, Configuring Lustre Networking (LNet).

For information about administering LNet, see Part III, “Administering Lustre”.

Key features of LNet include:

RDMA, when supported by underlying networks

Support for many commonly-used network types

High availability and recovery

Support of multiple network types simultaneously

Routing among disparate networks

LNet permits end-to-end read/write throughput at or near peak bandwidth rates on a variety of network interconnects.

A Lustre network is comprised of clients and servers running the Lustre software. It need not be confined to one LNet subnet but can span several networks provided routing is possible between the networks. In a similar manner, a single network can have multiple LNet subnets.

The Lustre networking stack is comprised of two layers, the LNet code module and the LND. The LNet layer operates above the LND layer in a manner similar to the way the network layer operates above the data link layer. LNet layer is connectionless, asynchronous and does not verify that data has been transmitted while the LND layer is connection oriented and typically does verify data transmission.

LNets are uniquely identified by a label comprised of a string corresponding to an LND and

a number, such as tcp0, o2ib0, or o2ib1, that uniquely identifies each LNet. Each node on an

LNet has at least one network identifier (NID). A NID is a combination of the address of the

network interface and the LNet label in the

form:address@LNet_label

Examples:

192.168.1.2@tcp0 10.13.24.90@o2ib1

In certain circumstances it might be desirable for Lustre file system traffic to pass between multiple LNets. This is possible using LNet routing. It is important to realize that LNet routing is not the same as network routing. For more details about LNet routing, see Chapter 9, Configuring Lustre Networking (LNet)

Table of Contents

This chapter describes failover in a Lustre file system. It includes:

In a high-availability (HA) system, unscheduled downtime is minimized by using redundant hardware and software components and software components that automate recovery when a failure occurs. If a failure condition occurs, such as the loss of a server or storage device or a network or software fault, the system's services continue with minimal interruption. Generally, availability is specified as the percentage of time the system is required to be available.

Availability is accomplished by replicating hardware and/or software so that when a primary server fails or is unavailable, a standby server can be switched into its place to run applications and associated resources. This process, called failover, is automatic in an HA system and, in most cases, completely application-transparent.

A failover hardware setup requires a pair of servers with a shared resource (typically a physical storage device, which may be based on SAN, NAS, hardware RAID, SCSI or Fibre Channel (FC) technology). The method of sharing storage should be essentially transparent at the device level; the same physical logical unit number (LUN) should be visible from both servers. To ensure high availability at the physical storage level, we encourage the use of RAID arrays to protect against drive-level failures.

Note

The Lustre software does not provide redundancy for data; it depends exclusively on redundancy of backing storage devices. The backing OST storage should be RAID 5 or, preferably, RAID 6 storage. MDT storage should be RAID 1 or RAID 10.

To establish a highly-available Lustre file system, power management software or hardware and high availability (HA) software are used to provide the following failover capabilities:

Resource fencing- Protects physical storage from simultaneous access by two nodes.

Resource management- Starts and stops the Lustre resources as a part of failover, maintains the cluster state, and carries out other resource management tasks.

Health monitoring- Verifies the availability of hardware and network resources and responds to health indications provided by the Lustre software.

These capabilities can be provided by a variety of software and/or hardware solutions. For more information about using power management software or hardware and high availability (HA) software with a Lustre file system, see Chapter 11, Configuring Failover in a Lustre File System.

HA software is responsible for detecting failure of the primary Lustre server node and controlling the failover.The Lustre software works with any HA software that includes resource (I/O) fencing. For proper resource fencing, the HA software must be able to completely power off the failed server or disconnect it from the shared storage device. If two active nodes have access to the same storage device, data may be severely corrupted.

Nodes in a cluster can be configured for failover in several ways. They are often configured in pairs (for example, two OSTs attached to a shared storage device), but other failover configurations are also possible. Failover configurations include:

Active/passive pair - In this configuration, the active node provides resources and serves data, while the passive node is usually standing by idle. If the active node fails, the passive node takes over and becomes active.

Active/active pair - In this configuration, both nodes are active, each providing a subset of resources. In case of a failure, the second node takes over resources from the failed node.

If there is a single MDT in a filesystem, two MDSes can be configured as an active/passive pair, while pairs of OSSes can be deployed in an active/active configuration that improves OST availability without extra overhead. Often the standby MDS is the active MDS for another Lustre file system or the MGS, so no nodes are idle in the cluster. If there are multiple MDTs in a filesystem, active-active failover configurations are available for MDSs that serve MDTs on shared storage.

The failover functionality provided by the Lustre software can be used for the following failover scenario. When a client attempts to do I/O to a failed Lustre target, it continues to try until it receives an answer from any of the configured failover nodes for the Lustre target. A user-space application does not detect anything unusual, except that the I/O may take longer to complete.

Failover in a Lustre file system requires that two nodes be configured as a failover pair, which must share one or more storage devices. A Lustre file system can be configured to provide MDT or OST failover.

For MDT failover, two MDSs can be configured to serve the same MDT. Only one MDS node can serve any MDT at one time. By placing two or more MDT devices on storage shared by two MDSs, one MDS can fail and the remaining MDS can begin serving the unserved MDT. This is described as an active/active failover pair.

For OST failover, multiple OSS nodes can be configured to be able to serve the same OST. However, only one OSS node can serve the OST at a time. An OST can be moved between OSS nodes that have access to the same storage device using

umount/mountcommands.

The

--servicenode option is used to set up nodes in a Lustre

file system for failover at creation time (using

mkfs.lustre) or later when the Lustre file system is

active (using

tunefs.lustre). For explanations of these utilities, see

Section 44.12, “

mkfs.lustre”and

Section 44.15, “

tunefs.lustre”.

Failover capability in a Lustre file system can be used to upgrade the Lustre software between successive minor versions without cluster downtime. For more information, see Chapter 17, Upgrading a Lustre File System.

For information about configuring failover, see Chapter 11, Configuring Failover in a Lustre File System.

Note

The Lustre software provides failover functionality only at the file system level. In a complete failover solution, failover functionality for system-level components, such as node failure detection or power control, must be provided by a third-party tool.

Caution

OST failover functionality does not protect against corruption caused by a disk failure. If the storage media (i.e., physical disk) used for an OST fails, it cannot be recovered by functionality provided in the Lustre software. We strongly recommend that some form of RAID be used for OSTs. Lustre functionality assumes that the storage is reliable, so it adds no extra reliability features.

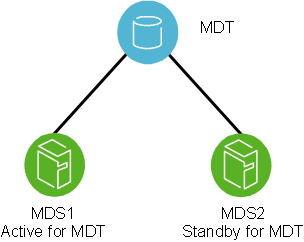

Two MDSs are typically configured as an active/passive failover pair as shown in Figure 3.1, “Lustre failover configuration for a active/passive MDT”. Note that both nodes must have access to shared storage for the MDT(s) and the MGS. The primary (active) MDS manages the Lustre system metadata resources. If the primary MDS fails, the secondary (passive) MDS takes over these resources and serves the MDTs and the MGS.

Note

In an environment with multiple file systems, the MDSs can be configured in a quasi active/active configuration, with each MDS managing metadata for a subset of the Lustre file system.

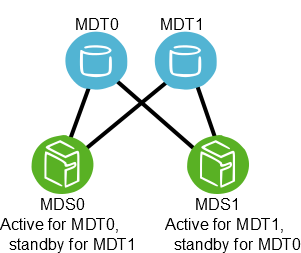

MDTs can be configured as an active/active failover configuration. A failover cluster is built from two MDSs as shown in Figure 3.2, “Lustre failover configuration for a active/active MDTs”.

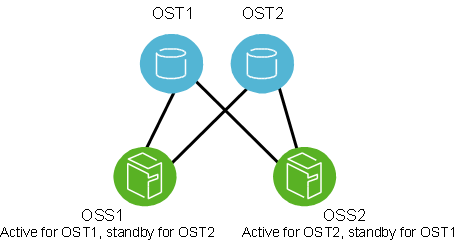

OSTs are usually configured in a load-balanced, active/active failover configuration. A failover cluster is built from two OSSs as shown in Figure 3.3, “Lustre failover configuration for an OSTs”.

Note

OSSs configured as a failover pair must have shared disks/RAID.

In an active configuration, 50% of the available OSTs are assigned to one OSS and the remaining OSTs are assigned to the other OSS. Each OSS serves as the primary node for half the OSTs and as a failover node for the remaining OSTs.

In this mode, if one OSS fails, the other OSS takes over all of the failed OSTs. The clients attempt to connect to each OSS serving the OST, until one of them responds. Data on the OST is written synchronously, and the clients replay transactions that were in progress and uncommitted to disk before the OST failure.

For more information about configuring failover, see Chapter 11, Configuring Failover in a Lustre File System.

Part II describes how to install and configure a Lustre file system. You will find information in this section about:

Table of Contents

- 4. Installation Overview

- 5. Determining Hardware Configuration Requirements and Formatting Options

- 6. Configuring Storage on a Lustre File System

- 7. Setting Up Network Interface Bonding

- 8. Installing the Lustre Software

- 9. Configuring Lustre Networking (LNet)

- 9.1. Configuring LNet via

lnetctlL 2.7 - 9.1.1. Configuring LNet

- 9.1.2. Displaying Global Settings

- 9.1.3. Adding, Deleting and Showing Networks

- 9.1.4. Manual Adding, Deleting and Showing PeersL 2.10

- 9.1.5. Dynamic Peer DiscoveryL 2.11

- 9.1.6. Adding, Deleting and Showing routes

- 9.1.7. Enabling and Disabling Routing

- 9.1.8. Showing routing information

- 9.1.9. Configuring Routing Buffers

- 9.1.10. Asymmetrical RoutesL 2.13

- 9.1.11. Importing YAML Configuration File

- 9.1.12. Exporting Configuration in YAML format

- 9.1.13. Showing LNet Traffic Statistics

- 9.1.14. YAML Syntax

- 9.2. Overview of LNet Module Parameters

- 9.3. Setting the LNet Module networks Parameter

- 9.4. Setting the LNet Module ip2nets Parameter

- 9.5. Setting the LNet Module routes Parameter

- 9.6. Testing the LNet Configuration

- 9.7. Configuring the Router Checker

- 9.8. Best Practices for LNet Options

- 9.1. Configuring LNet via

- 10. Configuring a Lustre File System

- 11. Configuring Failover in a Lustre File System

Table of Contents

This chapter provides on overview of the procedures required to set up, install and configure a Lustre file system.

Note

If the Lustre file system is new to you, you may find it helpful to refer to Part I, “Introducing the Lustre* File System” for a description of the Lustre architecture, file system components and terminology before proceeding with the installation procedure.

To set up Lustre file system hardware and install and configure the Lustre software, refer the the chapters below in the order listed:

(Required) Set up your Lustre file system hardware.

See Chapter 5, Determining Hardware Configuration Requirements and Formatting Options - Provides guidelines for configuring hardware for a Lustre file system including storage, memory, and networking requirements.

(Optional - Highly Recommended) Configure storage on Lustre storage devices.

See Chapter 6, Configuring Storage on a Lustre File System - Provides instructions for setting up hardware RAID on Lustre storage devices.

(Optional) Set up network interface bonding.

See Chapter 7, Setting Up Network Interface Bonding - Describes setting up network interface bonding to allow multiple network interfaces to be used in parallel to increase bandwidth or redundancy.

(Required) Install Lustre software.

See Chapter 8, Installing the Lustre Software - Describes preparation steps and a procedure for installing the Lustre software.

(Optional) Configure Lustre Networking (LNet).

See Chapter 9, Configuring Lustre Networking (LNet) - Describes how to configure LNet if the default configuration is not sufficient. By default, LNet will use the first TCP/IP interface it discovers on a system. LNet configuration is required if you are using InfiniBand or multiple Ethernet interfaces.

(Required) Configure the Lustre file system.

See Chapter 10, Configuring a Lustre File System - Provides an example of a simple Lustre configuration procedure and points to tools for completing more complex configurations.

(Optional) Configure Lustre failover.

See Chapter 11, Configuring Failover in a Lustre File System - Describes how to configure Lustre failover.

Table of Contents

This chapter describes hardware configuration requirements for a Lustre file system including:

A Lustre file system can utilize any kind of block storage device such as single disks, software RAID, hardware RAID, or a logical volume manager. In contrast to some networked file systems, the block devices are only attached to the MDS and OSS nodes in a Lustre file system and are not accessed by the clients directly.

Since the block devices are accessed by only one or two server nodes, a storage area network (SAN) that is accessible from all the servers is not required. Expensive switches are not needed because point-to-point connections between the servers and the storage arrays normally provide the simplest and best attachments. (If failover capability is desired, the storage must be attached to multiple servers.)

For a production environment, it is preferable that the MGS have separate storage to allow future expansion to multiple file systems. However, it is possible to run the MDS and MGS on the same machine and have them share the same storage device.

For best performance in a production environment, dedicated clients are required. For a non-production Lustre environment or for testing, a Lustre client and server can run on the same machine. However, dedicated clients are the only supported configuration.

Warning

Performance and recovery issues can occur if you put a client on an MDS or OSS:

Running the OSS and a client on the same machine can cause issues with low memory and memory pressure. If the client consumes all the memory and then tries to write data to the file system, the OSS will need to allocate pages to receive data from the client but will not be able to perform this operation due to low memory. This can cause the client to hang.

Running the MDS and a client on the same machine can cause recovery and deadlock issues and impact the performance of other Lustre clients.

Only servers running on 64-bit CPUs are tested and supported. 64-bit CPU clients are typically used for testing to match expected customer usage and avoid limitations due to the 4 GB limit for RAM size, 1 GB low-memory limitation, and 16 TB file size limit of 32-bit CPUs. Also, due to kernel API limitations, performing backups of Lustre filesystems on 32-bit clients may cause backup tools to confuse files that report the same 32-bit inode number, if the backup tools depend on the inode number for correct operation.

The storage attached to the servers typically uses RAID to provide fault tolerance and can optionally be organized with logical volume management (LVM), which is then formatted as a Lustre file system. Lustre OSS and MDS servers read, write and modify data in the format imposed by the file system.

The Lustre file system uses journaling file system technology on both the MDTs and OSTs. For a MDT, as much as a 20 percent performance gain can be obtained by placing the journal on a separate device.

The MDS can effectively utilize a lot of CPU cycles. A minimum of four processor cores are recommended. More are advisable for files systems with many clients.

Note

Lustre clients running on different CPU architectures is supported. One limitation is that the PAGE_SIZE kernel macro on the client must be as large as the PAGE_SIZE of the server. In particular, ARM or PPC clients with large pages (up to 64kB pages) can run with x86 servers (4kB pages).

MGT storage requirements are small (less than 100 MB even in the largest Lustre file systems), and the data on an MGT is only accessed on a server/client mount, so disk performance is not a consideration. However, this data is vital for file system access, so the MGT should be reliable storage, preferably mirrored RAID1.

MDS storage is accessed in a database-like access pattern with many seeks and read-and-writes of small amounts of data. Storage types that provide much lower seek times, such as SSD or NVMe is strongly preferred for the MDT, and high-RPM SAS is acceptable.

For maximum performance, the MDT should be configured as RAID1 with an internal journal and two disks from different controllers.

If you need a larger MDT, create multiple RAID1 devices from pairs

of disks, and then make a RAID0 array of the RAID1 devices. For ZFS,

use mirror VDEVs for the MDT. This ensures

maximum reliability because multiple disk failures only have a small

chance of hitting both disks in the same RAID1 device.

Doing the opposite (RAID1 of a pair of RAID0 devices) has a 50% chance that even two disk failures can cause the loss of the whole MDT device. The first failure disables an entire half of the mirror and the second failure has a 50% chance of disabling the remaining mirror.

If multiple MDTs are going to be present in the system, each MDT should be specified for the anticipated usage and load. For details on how to add additional MDTs to the filesystem, see Section 14.7, “Adding a New MDT to a Lustre File System”.

Warning

MDT0000 contains the root of the Lustre file system. If MDT0000 is unavailable for any reason, the file system cannot be used.

Note

Using the DNE feature it is possible to dedicate additional

MDTs to sub-directories off the file system root directory stored on

MDT0000, or arbitrarily for lower-level subdirectories, using the

lfs mkdir -i

command. If an MDT serving a subdirectory becomes unavailable, any

subdirectories on that MDT and all directories beneath it will also

become inaccessible. This is typically useful for top-level directories

to assign different users or projects to separate MDTs, or to distribute

other large working sets of files to multiple MDTs.mdt_index

Note

Starting in the 2.8 release it is possible

to spread a single large directory across multiple MDTs using the DNE

striped directory feature by specifying multiple stripes (or shards)

at creation time using the

lfs mkdir -c

command, where stripe_countstripe_count is often the

number of MDTs in the filesystem. Striped directories should

not be used for all directories in the filesystem, since this

incurs extra overhead compared to unstriped directories. This is indended

for specific applications where many output files are being created in

one large directory (over 50k entries).

The data access pattern for the OSS storage is a streaming I/O pattern that is dependent on the access patterns of applications being used. Each OSS can manage multiple object storage targets (OSTs), one for each volume with I/O traffic load-balanced between servers and targets. An OSS should be configured to have a balance between the network bandwidth and the attached storage bandwidth to prevent bottlenecks in the I/O path. Depending on the server hardware, an OSS typically serves between 2 and 8 targets, with each target between 24-48TB, but may be up to 256 terabytes (TBs) in size.

Lustre file system capacity is the sum of the capacities provided by the targets. For example, 64 OSSs, each with two 8 TB OSTs, provide a file system with a capacity of nearly 1 PB. If each OST uses ten 1 TB SATA disks (8 data disks plus 2 parity disks in a RAID-6 configuration), it may be possible to get 50 MB/sec from each drive, providing up to 400 MB/sec of disk bandwidth per OST. If this system is used as storage backend with a system network, such as the InfiniBand network, that provides a similar bandwidth, then each OSS could provide 800 MB/sec of end-to-end I/O throughput. (Although the architectural constraints described here are simple, in practice it takes careful hardware selection, benchmarking and integration to obtain such results.)

The desired performance characteristics of the backing file systems on the MDT and OSTs are independent of one another. The size of the MDT backing file system depends on the number of inodes needed in the total Lustre file system, while the aggregate OST space depends on the total amount of data stored on the file system. If MGS data is to be stored on the MDT device (co-located MGT and MDT), add 100 MB to the required size estimate for the MDT.

Each time a file is created on a Lustre file system, it consumes

one inode on the MDT and one OST object over which the file is striped.

Normally, each file's stripe count is based on the system-wide

default stripe count. However, this can be changed for individual files

using the lfs setstripe option. For more details,

see Chapter 19, Managing File Layout (Striping) and Free

Space.

In a Lustre ldiskfs file system, all the MDT inodes and OST objects are allocated when the file system is first formatted. When the file system is in use and a file is created, metadata associated with that file is stored in one of the pre-allocated inodes and does not consume any of the free space used to store file data. The total number of inodes on a formatted ldiskfs MDT or OST cannot be easily changed. Thus, the number of inodes created at format time should be generous enough to anticipate near term expected usage, with some room for growth without the effort of additional storage.

By default, the ldiskfs file system used by Lustre servers to store user-data objects and system data reserves 5% of space that cannot be used by the Lustre file system. Additionally, an ldiskfs Lustre file system reserves up to 400 MB on each OST, and up to 4GB on each MDT for journal use and a small amount of space outside the journal to store accounting data. This reserved space is unusable for general storage. Thus, at least this much space will be used per OST before any file object data is saved.

With a ZFS backing filesystem for the MDT or OST, the space allocation for inodes and file data is dynamic, and inodes are allocated as needed. A minimum of 4kB of usable space (before mirroring) is needed for each inode, exclusive of other overhead such as directories, internal log files, extended attributes, ACLs, etc. ZFS also reserves approximately 3% of the total storage space for internal and redundant metadata, which is not usable by Lustre. Since the size of extended attributes and ACLs is highly dependent on kernel versions and site-specific policies, it is best to over-estimate the amount of space needed for the desired number of inodes, and any excess space will be utilized to store more inodes.

Less than 100 MB of space is typically required for the MGT. The size is determined by the total number of servers in the Lustre file system cluster(s) that are managed by the MGS.

When calculating the MDT size, the important factor to consider is the number of files to be stored in the file system, which depends on at least 2 KiB per inode of usable space on the MDT. Since MDTs typically use RAID-1+0 mirroring, the total storage needed will be double this.

Please note that the actual used space per MDT depends on the number

of files per directory, the number of stripes per file, whether files

have ACLs or user xattrs, and the number of hard links per file. The

storage required for Lustre file system metadata is typically 1-2

percent of the total file system capacity depending upon file size.

If the Chapter 20, Data on MDT (DoM) feature is in use for Lustre

2.11 or later, MDT space should typically be 5 percent or more of the

total space, depending on the distribution of small files within the

filesystem and the lod.*.dom_stripesize limit on

the MDT and file layout used.

For ZFS-based MDT filesystems, the number of inodes created on the MDT and OST is dynamic, so there is less need to determine the number of inodes in advance, though there still needs to be some thought given to the total MDT space compared to the total filesystem size.

For example, if the average file size is 5 MiB and you have 100 TiB of usable OST space, then you can calculate the minimum total number of inodes for MDTs and OSTs as follows:

(500 TB * 1000000 MB/TB) / 5 MB/inode = 100M inodes

It is recommended that the MDT(s) have at least twice the minimum number of inodes to allow for future expansion and allow for an average file size smaller than expected. Thus, the minimum space for ldiskfs MDT(s) should be approximately:

2 KiB/inode x 100 million inodes x 2 = 400 GiB ldiskfs MDT

For details about formatting options for ldiskfs MDT and OST file systems, see Section 5.3.1, “Setting Formatting Options for an ldiskfs MDT”.

Note

If the median file size is very small, 4 KB for example, the MDT would use as much space for each file as the space used on the OST, so the use of Data-on-MDT is strongly recommended in that case. The MDT space per inode should be increased correspondingly to account for the extra data space usage for each inode:

6 KiB/inode x 100 million inodes x 2 = 1200 GiB ldiskfs MDT

Note

If the MDT has too few inodes, this can cause the space on the

OSTs to be inaccessible since no new files can be created. In this

case, the lfs df -i and df -i

commands will limit the number of available inodes reported for the

filesystem to match the total number of available objects on the OSTs.

Be sure to determine the appropriate MDT size needed to support the

filesystem before formatting. It is possible to increase the

number of inodes after the file system is formatted, depending on the

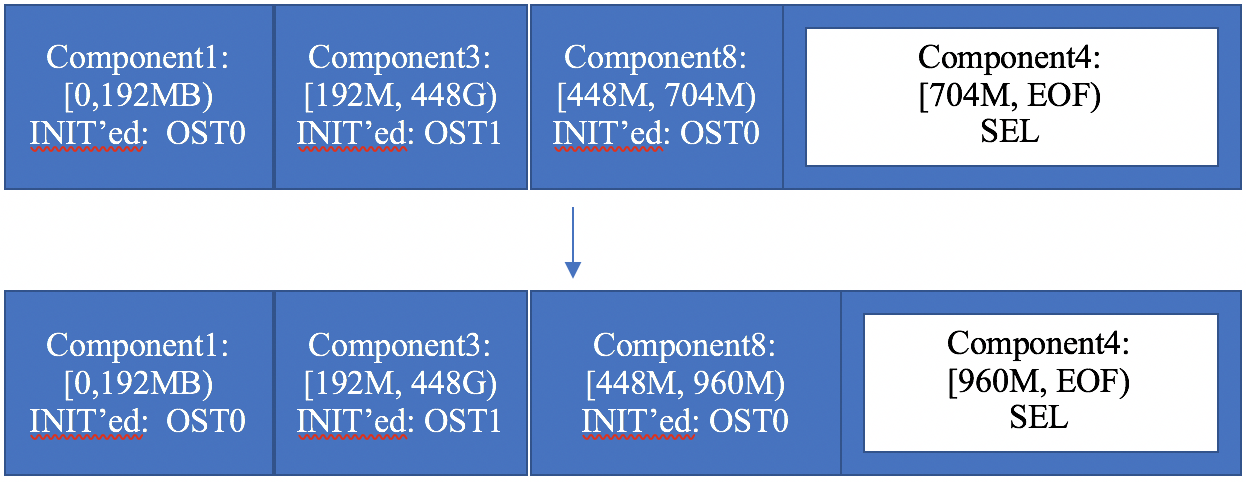

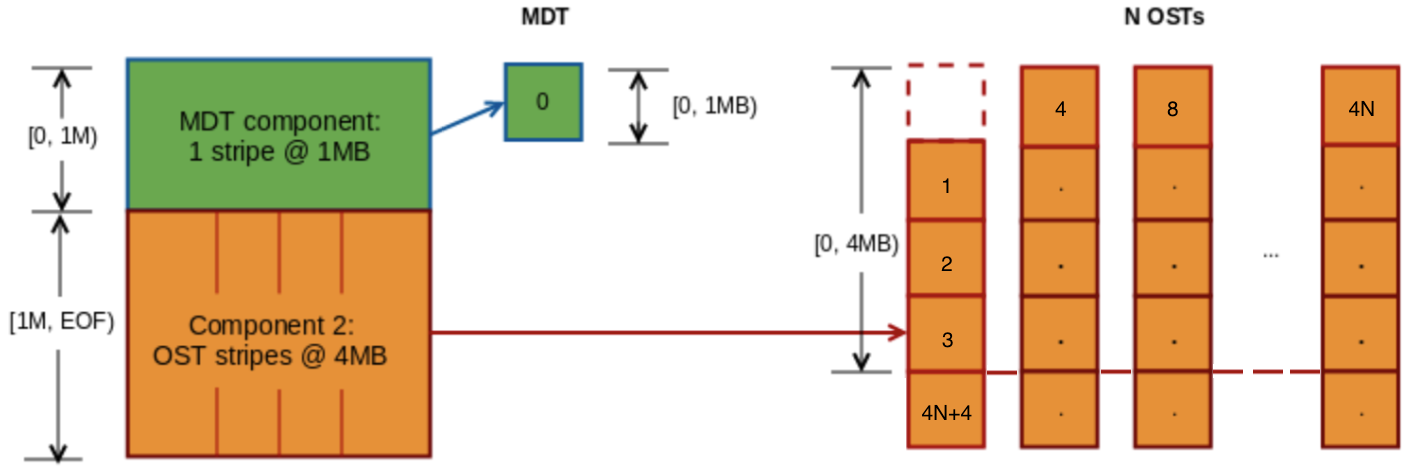

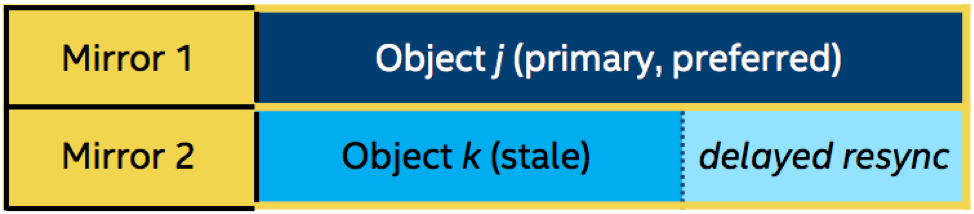

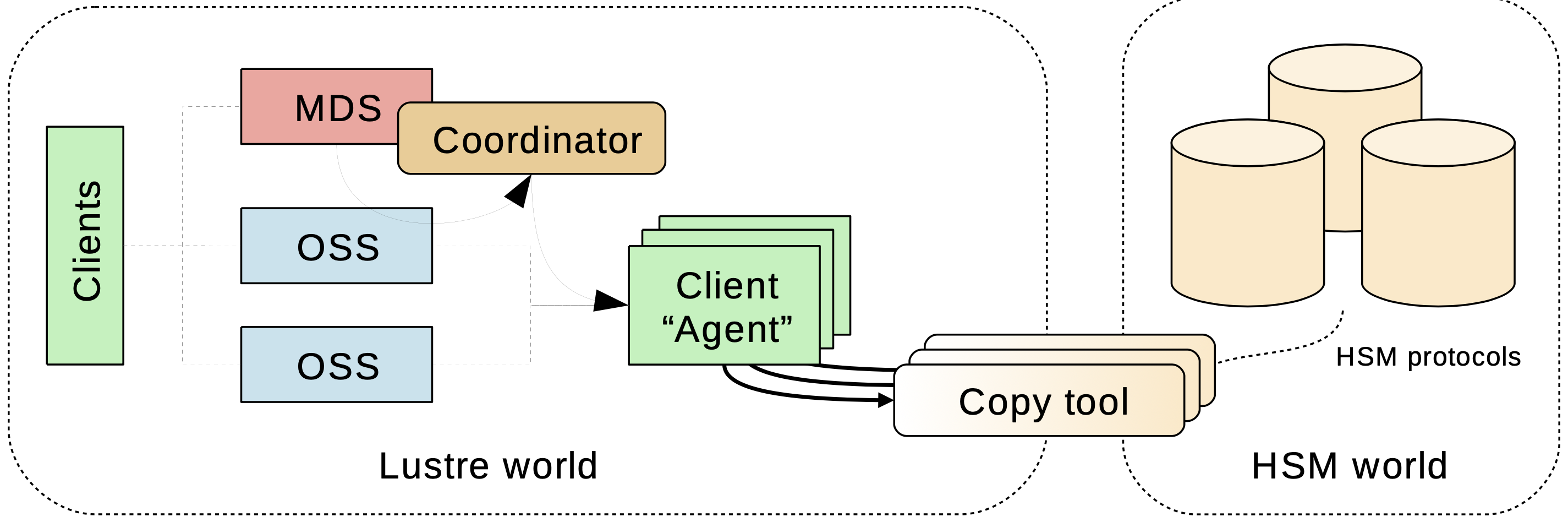

storage. For ldiskfs MDT filesystems the resize2fs